You might have heard the term “splat” or “Gaussian splat” used recently in the XR space. Splatting is a rendering technique that has been around for decades, but Gaussian splatting has found a new application in XR alongside neural radiance fields, commonly referred to as NeRFs. These techniques combine many still images taken from different angles, using GPU-accelerated optimization and, in the case of NeRFs, neural networks, to quickly create a 3-D model or 3-D scene. Sometimes, these can even be generated in real time.

These techniques help accelerate the creation of 3-D assets, whether they are real-world objects that need to be brought into digital media or full scans of an entire factory for a digital twin. They are significantly faster and rely on less-expensive equipment than traditional lidar-based scanning, which is costly both to operate and to process. While lidar solutions are highly accurate and capture millions of data points for enterprise applications, they can be very limiting for anyone without the time and resources to use them. NeRF-assisted Gaussian splatting is still a form of photogrammetry, but it is designed to deliver a cheaper and faster way to create 3-D assets. That addresses one of the key factors inhibiting the growth of the spatial computing industry, the cost and effort of creating 3-D content, which makes it a critical technology for XR’s success.

Numerous papers have been published on Gaussian splatting, which is an AI-accelerated form of rasterization, a fairly quick and common way of rendering graphics. The latest generation of Gaussian splats can run on smartphones by leveraging a hybrid of local and cloud computing, but many of these solutions still struggle with artifacts and quality issues. (Artifacts such as jagged edges around the subject are common with low-resolution or highly compressed videos or images.) Volygon’s Gaussian splat solution, like its volumetric video solution I have previously written about, addresses these issues for commercial use and creates flawless 3-D scenes for film applications. Volygon is the new name of HypeVR, and I believe it better matches the company’s technology, especially since that tech now applies well beyond VR.
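To make the rasterization point more concrete, here is a minimal sketch of how a single pixel might be shaded in a Gaussian splat renderer, loosely following the compositing rule popularized by the 2023 3D Gaussian Splatting work: splats are sorted by depth, each one’s opacity falls off with distance from its center, and colors are blended front to back until the pixel is effectively opaque. It assumes the Gaussians have already been projected onto the image plane, ignores view-dependent color and tile-based GPU scheduling, and uses hypothetical names (`Splat`, `splat_alpha`, `shade_pixel`) that do not come from any particular library, and certainly not from Volygon’s pipeline.

```python
import math
from dataclasses import dataclass


@dataclass
class Splat:
    mean: tuple[float, float]          # projected center on the image plane (pixels)
    cov: tuple[float, float, float]    # 2-D covariance stored as (sxx, sxy, syy)
    color: tuple[float, float, float]  # RGB in [0, 1]
    opacity: float                     # peak opacity in [0, 1]
    depth: float                       # distance from the camera, used for sorting


def splat_alpha(s: Splat, px: float, py: float) -> float:
    """Opacity contributed by one projected Gaussian at pixel (px, py)."""
    dx, dy = px - s.mean[0], py - s.mean[1]
    sxx, sxy, syy = s.cov
    det = sxx * syy - sxy * sxy
    # Invert the 2x2 covariance, then compute the squared Mahalanobis distance.
    ixx, ixy, iyy = syy / det, -sxy / det, sxx / det
    m2 = dx * dx * ixx + 2.0 * dx * dy * ixy + dy * dy * iyy
    # Cap alpha just below 1 so a single splat never fully blocks everything behind it.
    return min(0.99, s.opacity * math.exp(-0.5 * m2))


def shade_pixel(splats: list[Splat], px: float, py: float) -> tuple[float, float, float]:
    """Front-to-back alpha compositing of depth-sorted splats at one pixel."""
    rgb = [0.0, 0.0, 0.0]
    transmittance = 1.0  # fraction of light that still passes through to deeper splats
    for s in sorted(splats, key=lambda sp: sp.depth):
        a = splat_alpha(s, px, py)
        for c in range(3):
            rgb[c] += transmittance * a * s.color[c]
        transmittance *= 1.0 - a
        if transmittance < 1e-4:  # pixel is effectively opaque; stop early
            break
    return (rgb[0], rgb[1], rgb[2])
```

For example, a half-transparent red splat in front of an opaque blue one at the same pixel yields roughly (0.5, 0.0, 0.495): half the light comes from the red splat and nearly all of the remainder from the blue one. Because each pixel is just a sorted, weighted sum, this step maps well onto GPUs, which is a large part of why splats tend to render faster than ray-marched NeRFs.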

The State Of Splats

There are two predominant ways to make a NeRF-assisted Gaussian splat. One is with a smartphone, often assisted by a depth sensor; on iPhones this commonly means the rear lidar scanner Apple introduced on its Pro models starting with the iPhone 12 Pro. However, the technology has improved over time and no longer necessarily requires depth data to create fairly accurate 3-D assets. The other way is to capture images with a high-resolution camera and feed them into tools such as Nvidia’s InstantSplat or the open-source Nerfstudio framework. Nvidia has also created its own NeRF models to make things easier for developers, including Instant NeRF and NeRF-XL.

Some of the most popular smartphone and web apps for this, such as Scaniverse, Luma AI and Polycam, enable people to create 3-D assets faster and more cheaply than ever before. However, in my experience many of them have quality limitations, which is the compromise for being fast and cheap. It reminds me of the old adage: everyone would love a product that’s fast, cheap and good, but you can usually have only two of the three. While I believe these apps will improve with time, they still generate a meaningful number of artifacts. This has kept them out of film and other industries where everything needs to look perfect. By contrast, Volygon’s depth-assisted Gaussian splatting can serve the film industry, and really any industry that needs super-high-quality 3-D scans that aren’t expensive to produce.

Volygon’s Depth-Assisted Gaussian Splatting

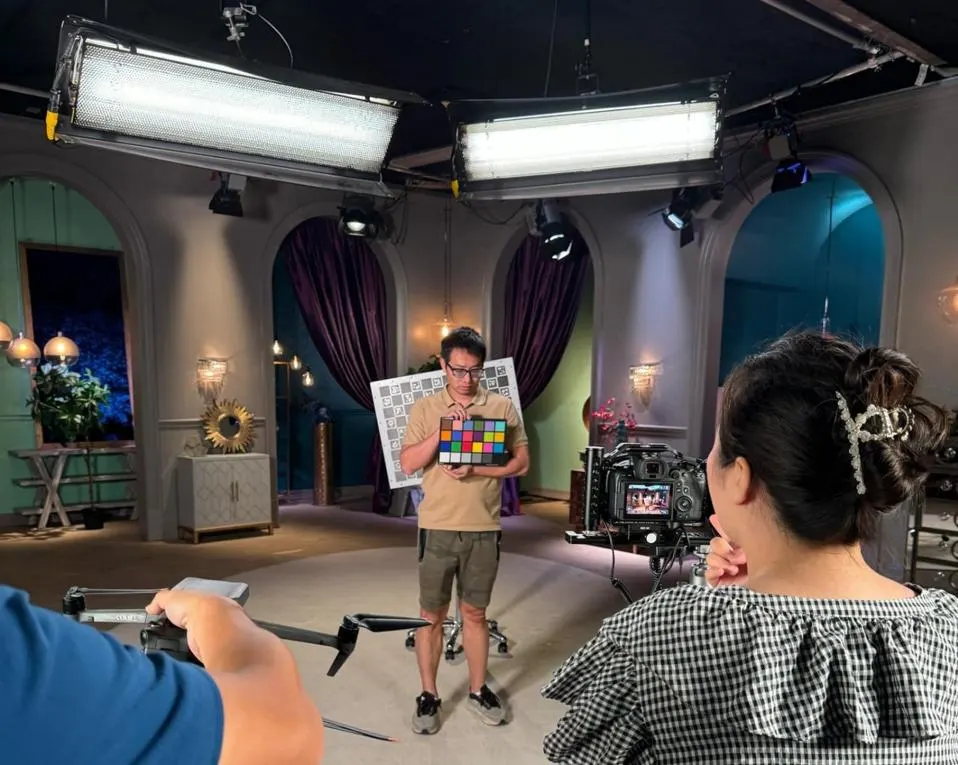

Volygon specializes in photorealistic real-time 3-D technologies. Its primary product is a 3-D volumetric video capture solution with a proprietary codec that enables extremely high-quality real-time volumetric video. With post-processing, it can achieve even higher-quality volumetric captures, which are among the best I have seen to date. Given this background, I was pleased when the company’s CEO invited me to see the latest thing Volygon has been working on with one of Hollywood’s biggest studios, Amazon.

Amazon is working with Volygon to make virtual production faster and more efficient. Virtual production is a fairly new approach, introduced over the last few years, that takes advantage of many of the latest technologies available to the graphics industry to make production cheaper and faster for studios. LED video walls have been one of its major enablers, paired with the ability to render scenes on those walls that accurately depict the environment a director is trying to create. (The Mandalorian broke new ground in this use of technology over the past few years.)

Kenneth Nakada, head of virtual production operations at Amazon MGM Studios, said, “Working with [CEO] Tonaci [Tran] and his team at Volygon has been an exceptional experience. Their commitment to delivering extremely high-quality photorealistic scanning results, coupled with a professional team utilizing state-of-the-art equipment, has elevated our virtual production projects to new heights. Their expertise and dedication have made them a trusted partner for Amazon Studios.”

Volygon can scan an interior or exterior set for an Amazon Studios show, then create a full photorealistic 3-D reproduction of the set from the angles at which cameras have already captured footage. This lets the director and the studio easily return to any scene shot on that set and reshoot it, since the background is photorealistic and looks just as it would if the set were physically still there. That drives significant efficiencies for Amazon because it reduces the cost of reshoots, which are fairly common in the industry. It also means Amazon can use the same physical space more efficiently, since it doesn’t need to keep a set around any longer than necessary or rebuild it if reshoots are required.

When I went to check it out, I saw Volygon’s scan of an Amazon Studios set, and it was the most flawless photorealistic Gaussian splat I have ever seen. I attribute this to Volygon’s expertise in volumetric capture and stereo-pair capture, enhanced by the company’s proprietary AI-based depth estimation algorithm, which achieves sub-millimeter-level accuracy. Gaussian splats usually have some artifacts or blind spots where the cameras didn’t fully see the scene, but Volygon’s solution seems flawless, and capturing a full set takes less than an hour. That is still fairly quick, and much easier than a lidar scan.
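Volygon’s depth estimation is proprietary and AI-based, so its details aren’t public. For readers wondering why stereo-pair capture helps at all, the sketch below shows the classic textbook triangulation for a rectified stereo pair, not Volygon’s algorithm: depth equals focal length times the distance between the two cameras (the baseline) divided by disparity, which is how far a point shifts between the left and right images. The function names and example numbers are hypothetical and chosen only for illustration.

```python
def depth_from_disparity(focal_px: float, baseline_m: float, disparity_px: float) -> float:
    """Depth in meters of a point seen by a rectified stereo pair: Z = f * B / d."""
    if disparity_px <= 0:
        raise ValueError("disparity must be positive for a point in front of the cameras")
    return focal_px * baseline_m / disparity_px


def depth_uncertainty(focal_px: float, baseline_m: float, depth_m: float,
                      disparity_err_px: float) -> float:
    """First-order depth error caused by a disparity error: dZ ~= Z**2 / (f * B) * dd."""
    return depth_m ** 2 / (focal_px * baseline_m) * disparity_err_px


if __name__ == "__main__":
    # Hypothetical rig: 2000-pixel focal length, 10 cm baseline, 100-pixel disparity.
    z = depth_from_disparity(focal_px=2000.0, baseline_m=0.1, disparity_px=100.0)
    err = depth_uncertainty(2000.0, 0.1, z, disparity_err_px=0.1)
    print(f"depth: {z:.3f} m, uncertainty: {err * 1000:.2f} mm")
```

The second function shows why fine accuracy is hard to reach: depth error grows with the square of distance and shrinks with a wider baseline, a longer focal length or more precise (sub-pixel) disparity matching.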

Going Beyond Film Production

While I believe Volygon’s Gaussian splat solution will be a huge advancement for the virtual production space, I also believe that digital twins could benefit heavily from it. The digital twin space is becoming ever more critical for cutting-edge applications in enabling autonomy for cars, automated factories, embodied AI robots and so much more. I could even see such a solution powering a robot in your home with hyper-accurate and up-to-date 3-D maps of the space.

I also believe that this level of 3-D image quality should enable more immersive education and collaboration uses for AR, VR and MR headsets like the Apple Vision Pro and the Meta Quest. Heck, even Snap’s Spectacles could benefit from high-fidelity scans like this—no great leap considering that Snap is already partnered with Scaniverse for 3-D Gaussian splats of smaller objects.

Regardless, Gaussian splats are continuing to gain steam across the industry. I even experienced them recently at Google using the new Android XR operating system on Samsung’s Project Moohan MR goggles: a spatial version of Google Maps used Gaussian splats to render a 3-D reconstruction of a restaurant I had just visited, built only from public photos on Google Maps. Now that this technology is becoming more refined thanks to the efforts of Volygon and others, I expect to see it deployed for many more use cases.