Intel just continued the execution of its aggressive “five nodes in four years” strategy with the launch of the Xeon 6P (for performance) CPU and the Gaudi 3 AI accelerator. This launch comes at a time when Intel’s chief competitor, AMD, has been steadily claiming datacenter market share with its EPYC CPU.

It’s not hyperbolic to say that a successful launch of Xeon 6P is important to Intel’s fortunes, because the Xeon line has lagged in terms of performance and performance per watt for the past several generations. While Xeon 6E set the tone this summer by responding to the core density its competition has been touting, Xeon 6P needed to hit AMD on the performance front.

Has Xeon 6P helped Intel close the gap with EPYC? Is Gaudi 3 going to put Intel into the AI discussion? This article will dig into these questions and more.

Didn’t Intel Already Launch Xeon?

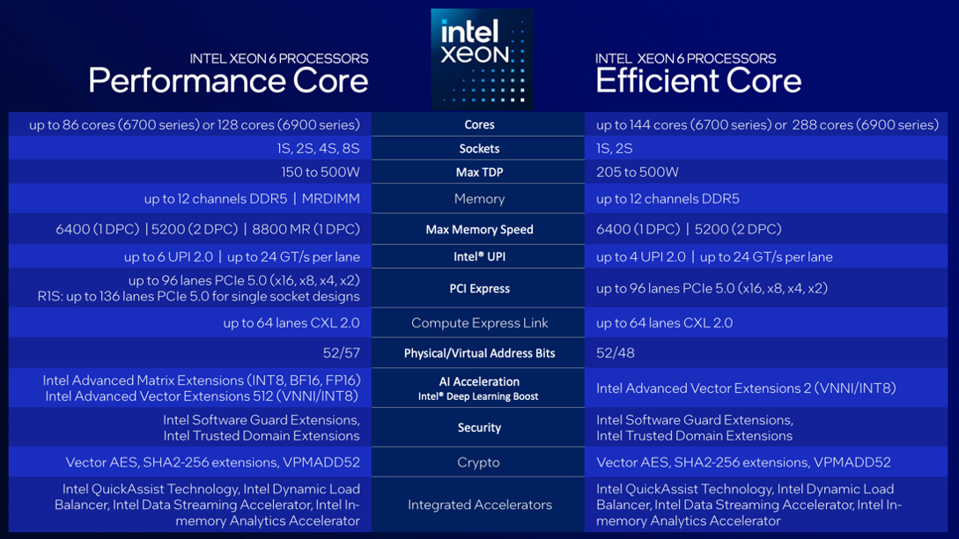

Yes and no—and it’s worth taking a moment to explain what’s going on. Xeon 6 represents the first time in recent history that Intel has delivered two different CPUs to address the range of workloads in the datacenter. In June 2024, Intel launched its Xeon 6E (i.e., Intel Xeon 6700E), which uses the Xeon 6 efficiency core. This CPU, codenamed “Sierra Forest,” ships with up to 144 “little cores,” as Intel calls them, and focuses on cloud-native and scale-out workloads. Although Intel targets these chips for cloud build-outs, I believe that servers with 6E make for great virtualized infrastructure platforms in the enterprise.

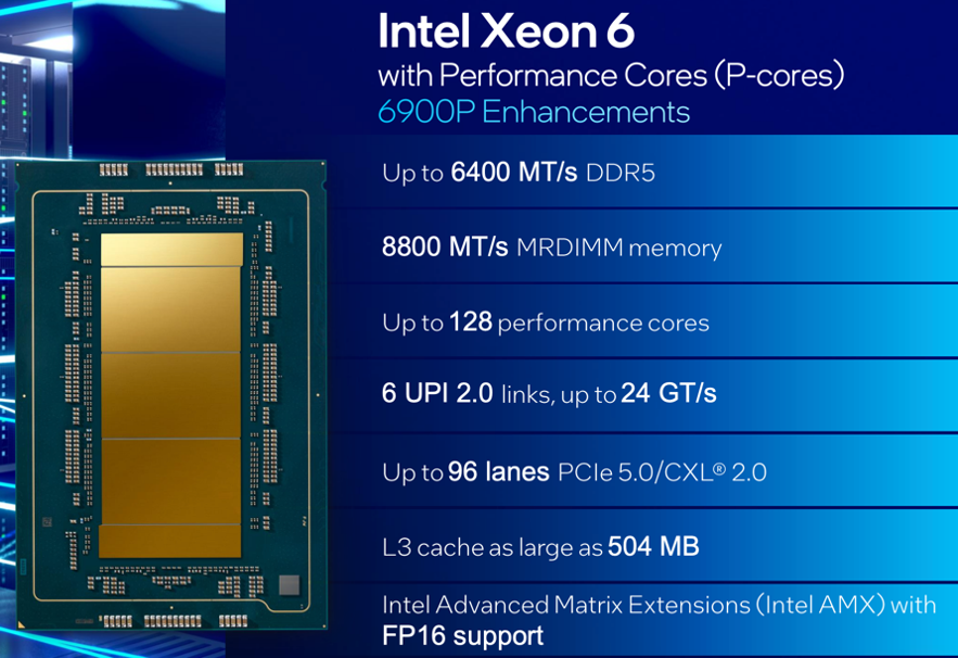

In this latest launch, Intel delivered its Xeon 6P CPU (Intel Xeon 6900P), which uses the Xeon 6 performance core. These CPUs, codenamed “Granite Rapids,” sit at the high end of the performance curve, with high core counts, a lot of cache and the full range of accelerators. Specifically, Xeon 6P uses Advanced Matrix Extensions (AMX) to significantly accelerate AI workloads. This CPU is Intel’s enterprise data workhorse for database, data analytics, EDA and HPC workloads.
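For readers who want to check whether a given Linux server actually exposes AMX, a minimal sketch follows. It assumes the Linux kernel reports AMX support in `/proc/cpuinfo` under the flag names `amx_tile`, `amx_bf16` and `amx_int8`; `amx_fp16` is included on the assumption that newer kernels report FP16 AMX support under that name. The helper function names are hypothetical, and other operating systems expose CPU features differently.

```python
# Minimal sketch: report which AMX-related CPU flags a Linux host exposes.
# Assumption: the kernel lists AMX support on the "flags" line of
# /proc/cpuinfo using the names below (amx_fp16 only on newer kernels/CPUs).
from pathlib import Path

AMX_FLAGS = ("amx_tile", "amx_bf16", "amx_int8", "amx_fp16")


def amx_flags_from_cpuinfo(cpuinfo_text: str) -> set[str]:
    """Return the AMX flag names found on the first 'flags' line."""
    for line in cpuinfo_text.splitlines():
        if line.startswith("flags"):
            # Line format: "flags\t\t: fpu vme de ... amx_tile ..."
            _, _, values = line.partition(":")
            present = set(values.split())
            return {flag for flag in AMX_FLAGS if flag in present}
    return set()


def host_amx_flags(path: str = "/proc/cpuinfo") -> set[str]:
    """Read cpuinfo from disk; return an empty set if it cannot be read."""
    try:
        return amx_flags_from_cpuinfo(Path(path).read_text())
    except OSError:
        return set()


if __name__ == "__main__":
    found = host_amx_flags()
    print(sorted(found) if found else "no AMX flags reported")
```

The parser takes raw text rather than a file path so it can be tested without a real Xeon host; an empty result means only that the kernel did not report the flags, not necessarily that the silicon lacks them.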

The company will release the complementary Xeon 6900E and 6700P series CPUs in Q1 2025. The 6900E will expand on the 6700E by targeting extreme scale-out workloads with up to 288 cores. Meanwhile, the 6700P will be a lower-performance Xeon with fewer cores and less cache. It will still be well suited to enterprise workloads, just without the extreme specs of the 6900P.

Effectively, Intel has launched the lowest end of the Xeon 6 family (6700E) and the highest end (6900P). In Q1 2025, it will fill in the middle with the 6700P and 6900E.

The other part of Intel’s datacenter launch was Gaudi 3, the company’s AI accelerator. Like Xeon 6, Gaudi 3 has been talked about for some time. CEO Pat Gelsinger announced it at the company’s Vision conference in April, where we provided a bit of coverage that’s still worth reading for more context. At Computex in June, Gelsinger offered more details, including pricing. The prices he cited suggest a significant advantage for Intel compared to what we suspect Nvidia and AMD are charging for their comparable products (neither company has published its prices). However, for as much as Gaudi 3 has already been discussed, it has only now officially launched.

What’s Inside Xeon 6P?

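Xeon 6P is a chiplet design built on two processes. The compute dies, which contain the cores, cache, mesh fabric (how the cores connect) and memory controllers, are built on the Intel 3 process. Despite the name, this is not a literal 3nm feature size; Intel’s current node names are meant to signal rough parity with competitors’ similarly named nodes rather than a physical dimension. The chip’s I/O dies are built on the older Intel 7 process (formerly known as 10nm Enhanced SuperFin). These I/O dies contain the PCIe and CXL controllers and Xeon’s accelerator engines (more on those later).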

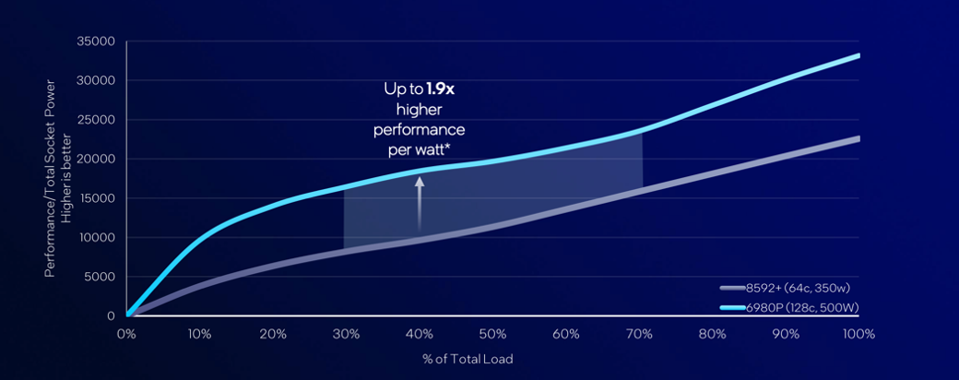

The result of the process shrink in Xeon 6 is a significant performance-per-watt advantage over its predecessor. Across the normal range of average utilization rates, Intel says Xeon 6P delivers a 1.9x increase in performance per watt relative to 5th Gen Xeon.

Intel’s testing compared its top-of-bin (highest-performing) CPU, the 6980P with 128 cores and a 500-watt TDP, against the Xeon Platinum 8592+, a top-of-bin 5th Gen Xeon with a 350-watt TDP. Long story short, Intel has delivered twice the cores with a roughly 7% increase in per-core performance and a considerably lower TDP per core.
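The per-core comparison above can be checked with a few lines of back-of-envelope arithmetic. This sketch uses only the figures cited in the text (128 vs. 64 cores, 500 W vs. 350 W TDP, a roughly 7% per-core uplift) and assumes throughput scales with core count times per-core performance. TDP is a thermal design ceiling, not measured power draw, so treat the results as rough bounds rather than a reproduction of Intel’s methodology.

```python
# Back-of-envelope arithmetic using the figures cited in the text.
# Assumption: throughput scales as (core count x per-core performance).
# TDP is a thermal ceiling, not measured power, so results are rough.

XEON_6980P = {"cores": 128, "tdp_w": 500}
XEON_8592_PLUS = {"cores": 64, "tdp_w": 350}  # "twice the cores" implies 64
PER_CORE_PERF_GAIN = 1.07  # "roughly 7%" per-core uplift cited by Intel


def tdp_per_core(chip: dict) -> float:
    """Watts of TDP budget per core."""
    return chip["tdp_w"] / chip["cores"]


def naive_perf_per_watt_ratio(new: dict, old: dict, per_core_gain: float) -> float:
    """Relative perf/W at full TDP under the simple scaling assumption."""
    throughput_ratio = (new["cores"] / old["cores"]) * per_core_gain
    power_ratio = new["tdp_w"] / old["tdp_w"]
    return throughput_ratio / power_ratio


if __name__ == "__main__":
    print(f"6980P: {tdp_per_core(XEON_6980P):.2f} W/core")
    print(f"8592+: {tdp_per_core(XEON_8592_PLUS):.2f} W/core")
    ratio = naive_perf_per_watt_ratio(XEON_6980P, XEON_8592_PLUS, PER_CORE_PERF_GAIN)
    print(f"naive perf/W at full TDP: {ratio:.2f}x")
```

Under these assumptions the 6980P has about 3.91 W of TDP per core versus about 5.47 W for the 8592+, roughly 29% less, and the naive full-TDP performance-per-watt ratio comes out near 1.5x. That is lower than Intel’s 1.9x figure, which is measured at typical average utilization, where actual power draw does not track TDP; this simple ratio cannot capture that effect.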

It’s what’s inside the Xeon 6P that delivers a significant performance boost and brings it back into the performance discussion with its competition. Packed alongside those 128 performant cores is a rich memory configuration, lots of I/O and a big L3 cache. Combine these specs with the acceleration engines that Intel started shipping two generations ago, and you have a chip that is in a very competitive position against AMD.

The graphic above lists two memory speeds (6400 MT/s and 8800 MT/s), which may seem strange at first. Xeon 6P supports MRDIMM (multiplexed rank DIMM) technology. With MRDIMMs, a memory module can operate two ranks simultaneously, effectively doubling how much data the memory can transfer to the CPU per clock cycle (128 bytes versus 64 bytes). As the image shows, bandwidth increases substantially with MRDIMMs, meaning more data per second can be fed to those 128 cores. Xeon 6P is the first CPU to support this technology.
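To put the two memory speeds in concrete terms, the sketch below computes theoretical peak bandwidth as transfers per second times bytes per transfer times channel count. Two inputs are assumptions not stated in the text: 12 memory channels per socket on Xeon 6900P, and an 8-byte (64-bit) data path per DDR5 channel with ECC bits ignored. Sustained real-world bandwidth is always lower than these peaks.

```python
# Theoretical peak DRAM bandwidth per socket and per core.
# Assumptions (not stated in the article): 12 memory channels per socket
# on Xeon 6900P and a 64-bit (8-byte) data path per channel, ECC ignored.
# Sustained bandwidth in practice is lower than these peak figures.

BYTES_PER_TRANSFER = 8    # 64-bit data bus per channel
CHANNELS_PER_SOCKET = 12  # assumed Xeon 6900P channel count
CORES = 128               # top-of-bin Xeon 6P core count from the text


def peak_bandwidth_gbs(mt_per_s: int, channels: int = CHANNELS_PER_SOCKET) -> float:
    """Peak bandwidth in decimal GB/s: transfers/s x bytes/transfer x channels."""
    return mt_per_s * 1e6 * BYTES_PER_TRANSFER * channels / 1e9


if __name__ == "__main__":
    for label, speed in (("standard DDR5", 6400), ("MRDIMM", 8800)):
        bw = peak_bandwidth_gbs(speed)
        print(f"{label} @ {speed} MT/s: {bw:.1f} GB/s per socket, "
              f"{bw / CORES:.2f} GB/s per core")
    print(f"MRDIMM uplift: {8800 / 6400:.3f}x")
```

Under these assumptions, peak bandwidth rises from about 614 GB/s to about 845 GB/s per socket (a 1.375x increase), or from 4.8 GB/s to 6.6 GB/s per core when spread across all 128 cores.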

I point out this memory capability to give an example of the architectural design points that have led to some of Intel’s performance claims for Xeon 6P. Despite what some may say, performance is not just about core counts. Nor is it simply about how much memory or I/O a designer can stuff into a package. It’s about how quickly a chip can take data (and how much data), process it and move on to the next clock cycle.

Does Xeon 6P Deliver?

When I covered Intel’s launch of its 4th Gen Xeon (codenamed “Sapphire Rapids”), I talked about how I thought the company had found its bearings. This was not because of Xeon’s performance. Frankly, from a CPU perspective, it fell short. However, the company designed and dropped in a number of acceleration engines to deliver better real-world performance across the workloads that power the datacenter.

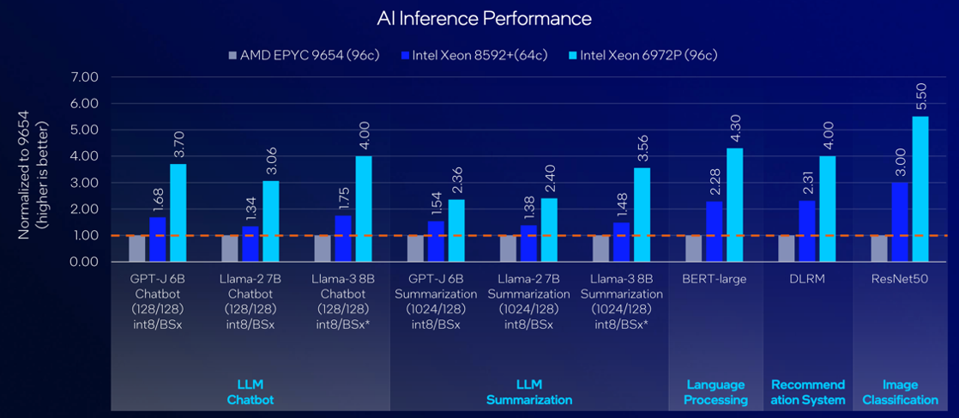

The design of Xeon 6P, building on what Intel introduced with Sapphire Rapids, sets it up to handle AI, analytics and other workloads well beyond what the (up to) 128 Redwood Cove cores can handle. And frankly, the Xeon 6P delivers. The company makes strong claims in its benchmarking along the computing spectrum—from general-purpose to HPC to AI. In each category, Intel claims significant performance advantages compared to AMD’s 4th Gen EPYC processors. In particular, Intel focused its benchmarks on AI and how Xeon stacks up.

As I say with every benchmark I ever cite, these should be taken with a grain of salt. These are Intel-run benchmarks on systems configured by its own testing teams. When AMD launches its “Turin” CPU in a few weeks, we’ll likely see results that contradict what is shown above and favor AMD. However, it is clear that Intel is back in the performance game with Xeon 6P. Further, I like that the company compared its performance against a top-performing AMD EPYC of the latest available generation, instead of cherry-picking a weaker AMD processor to puff up its own numbers.

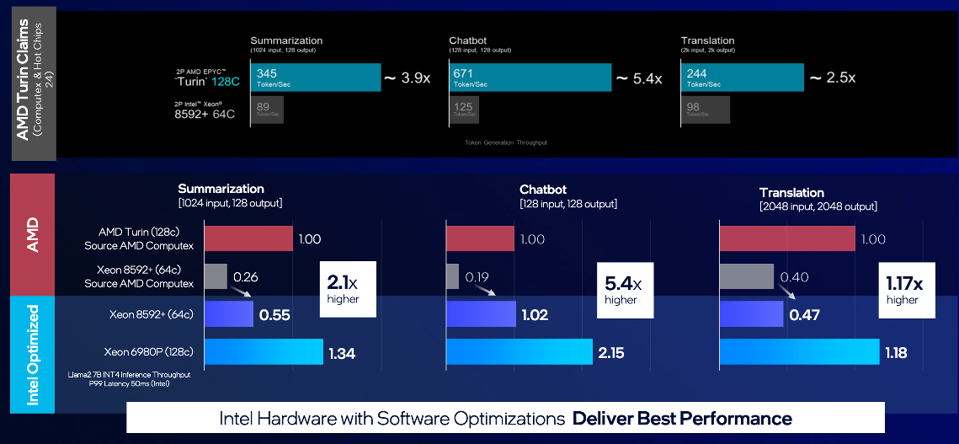

One last note on performance and how Xeon 6P stacks up. In a somewhat unusual move, Intel attempted to show its performance relative to what AMD will launch soon. At the Hot Chips and Computex conferences, AMD made some bold performance claims relative to Intel. In turn, Intel used this data to show Xeon 6P’s projected performance relative to Turin when the software stack is tuned for Intel CPUs.

Again, I urge you to take these numbers and claims with a grain of salt. However, Intel’s approach with these comparisons speaks to its confidence in Xeon 6P’s performance relative to the competition.

Does Gaudi 3 Put Intel In The AI Game?

As mentioned above, we covered the specifications and performance of Gaudi 3 in great detail in an earlier research note. So, I will forgo recapping those specs and get straight to the heart of the matter: Can Gaudi compete with Nvidia and AMD? The answer is: It depends.

From an AI training perspective, I believe Nvidia and to a lesser extent AMD currently have a lock on the market. Their GPUs have specifications that simply can’t be matched by the Gaudi 3 ASIC.

From an AI inference perspective, Intel does have a play with Gaudi 3. Intel’s figures show a price/performance advantage of up to 2x versus Nvidia’s H100 GPU on a Llama 2 70B model. On the Llama 3 8B model, the advantage fell to 1.8x performance per dollar.
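A performance-per-dollar advantage is a ratio of ratios, which is why an accelerator can win on this metric even when it is slower in absolute terms. The sketch below illustrates the arithmetic with hypothetical, normalized throughput and price values; they are placeholders, not Intel or Nvidia data (and, as noted earlier, neither Nvidia nor AMD publishes its prices). The `Accelerator` class and helper are illustrative names only.

```python
# Illustrates how a relative performance-per-dollar figure is computed.
# All throughput and price values are hypothetical, normalized placeholders,
# not measured data for any real accelerator.
from dataclasses import dataclass


@dataclass
class Accelerator:
    name: str
    throughput: float  # hypothetical inference throughput (normalized)
    price: float       # hypothetical price (normalized)

    def perf_per_dollar(self) -> float:
        return self.throughput / self.price


def relative_perf_per_dollar(a: Accelerator, b: Accelerator) -> float:
    """How many times more throughput per unit of cost `a` delivers than `b`."""
    return a.perf_per_dollar() / b.perf_per_dollar()


if __name__ == "__main__":
    baseline = Accelerator("baseline", throughput=1.0, price=1.0)
    cheaper = Accelerator("cheaper", throughput=0.9, price=0.5)
    # 10% less throughput at half the price yields 1.8x perf per dollar.
    print(f"{relative_perf_per_dollar(cheaper, baseline):.2f}x")
```

In this hypothetical case, a part with 90% of the baseline’s throughput at half its price delivers 1.8x the performance per dollar, the same kind of trade-off that makes Gaudi 3 interesting for budget-constrained inference deployments.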

This means that, for enterprise IT organizations moving beyond training and into inference, Gaudi 3 has a role, especially given the budget constraints many of those IT organizations are facing.

More importantly, over the next year or so Gaudi 3 will give way to “Falcon Shores,” Intel’s next GPU for the AI (and HPC) market. The important software work Intel has done for Gaudi will carry forward to it. Why does that matter? Because organizations that have spent time optimizing for Intel won’t have to start from scratch when Falcon Shores launches.

While I don’t expect Falcon Shores to bring serious competition to Nvidia or AMD, I do expect it will lead to a next-generation GPU that will properly put Intel in the AI training game. (It’s worth remembering that this is a game in its very early innings.)

Xeon 6P Gives Intel Something It Needed

Intel needed to make a significant statement in this latest datacenter launch. With Xeon 6P, it did just that. From process node to raw specs to real-world performance, the company was able to demonstrate that it is still a leader in the datacenter market.

While I expect AMD to make a compelling case for itself in a few weeks with its launch of Turin, it is good to see these old rivals on more equal footing. They make each other better, which in turn delivers greater value to the market.