Amazon Web Services held its annual re:Invent customer event last week in Las Vegas. More than 200 analysts attended, and the event focused on precisely what one would expect: AI, and how the largest cloud service provider on the planet is building the infrastructure, models, and tools to enable AI in the enterprise.

While my Moor Insights & Strategy colleagues Robert Kramer and Jason Andersen have their own thoughts to share about data and AI tools, this research note explores a few areas I found especially interesting: AWS chips and the Q Developer tool.

The AWS Silicon Evolution

AWS designs and builds much of its own silicon. The journey began with the Nitro System, which offloads networking, security, and some virtualization functions from the host server within AWS’s own virtualization framework. In effect, Nitro handles much of the low-level work that connects and secures AWS servers.

From there, the company moved into the CPU space with Graviton in 2018. Since its announcement, this chip has matured to its fourth generation and now supports about half the workloads running in AWS.

AWS announced Inferentia and Trainium in 2019 and 2020, respectively. As the names suggest, Inferentia is built for inference and Trainium for training. Although both have been available for some time, we haven’t heard as much about them as about the higher-profile Graviton. Even so, Inferentia and Trainium have delivered tangible value since launch. The first generation of Inferentia targeted deep learning inference, with AWS claiming 2.3x higher throughput and 70% lower cost per inference than the other inference-optimized EC2 instances available at the time.

Inferentia2, offered through Inf2 instances in EC2, targeted generative AI, with particular emphasis on distributed inference. Architectural changes to the silicon, combined with features such as sharding (splitting a model and distributing the work across chips), made it possible to deploy large models across multiple accelerators. As expected, performance improved markedly, with up to 4x the throughput and up to 10x lower latency relative to Inferentia1.
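To make the idea of sharding concrete, here is a minimal sketch of one common form, column-wise tensor sharding: a layer’s weight matrix is split across devices, each device computes its slice of the output, and the slices are concatenated. This is a generic illustration in plain Python, assuming a simple matrix-vector product; the function names are hypothetical, and it is not how Inferentia2 or the Neuron SDK actually implements sharding.

```python
# Hypothetical illustration of column-wise tensor sharding.
# Plain Python lists stand in for per-accelerator memory; this is NOT the
# Neuron SDK's implementation, just the general technique.

def shard_columns(weights, num_devices):
    """Split a (rows x cols) weight matrix into num_devices column blocks."""
    cols = len(weights[0])
    if cols % num_devices != 0:
        raise ValueError("columns must divide evenly across devices")
    width = cols // num_devices
    return [[row[d * width:(d + 1) * width] for row in weights]
            for d in range(num_devices)]

def matvec(x, weights):
    """Compute x @ weights for a vector x and a (rows x cols) matrix."""
    return [sum(x[i] * weights[i][j] for i in range(len(x)))
            for j in range(len(weights[0]))]

def sharded_matvec(x, weights, num_devices):
    """Each 'device' computes its own output slice; slices are concatenated."""
    partial_outputs = [matvec(x, shard)
                       for shard in shard_columns(weights, num_devices)]
    return [value for part in partial_outputs for value in part]

if __name__ == "__main__":
    W = [[1, 2, 3, 4],
         [5, 6, 7, 8]]
    x = [1, 1]
    print(matvec(x, W))             # [6, 8, 10, 12]
    print(sharded_matvec(x, W, 2))  # [6, 8, 10, 12], computed in two shards
```

The sharded result matches the unsharded one, but no single "device" ever holds the full weight matrix. That property is what lets a model too large for one accelerator run across several.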

Trainium2, from the Chip to the Cluster

Based on what we’ve seen through the Graviton and Inferentia evolutions, the bar has been raised for Trainium2, which AWS just released into general availability. The initial results look promising.

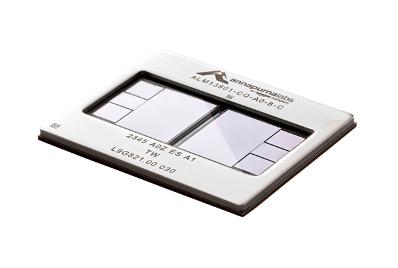

As it has done with other silicon, the Annapurna Labs team at AWS has delivered considerable gains in Trainium2. While architectural details are scant (which is normal for how AWS talks about its silicon), we do know that the chip is designed for big, cutting-edge generative AI models—both training and inference.

Further, AWS claims that Trainium2 instances (Trn2) offer 30% to 40% better price-performance than the GPU-based EC2 P5e and P5en instances, which are powered by NVIDIA H200s. AWS also announced new P6 instances based on NVIDIA’s hot new Blackwell GPU. One clarification is worth making here: unlike Blackwell, Trainium2 is not a GPU. It is an accelerator designed only for AI training and inference. The distinction matters because such purpose-built chips, though narrower in functionality, can deliver significant power savings relative to GPUs.
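A price-performance claim is easy to misread as a straight cost cut of the same percentage. The sketch below shows what the claim implies for a fixed job, assuming "price-performance" means work completed per dollar (so "30% better" means 1.3x the work per dollar). The $100,000 baseline and the function name are hypothetical.

```python
# Hypothetical arithmetic, ASSUMING price-performance = work done per dollar.
# An advantage of 0.30 means 1.30x the work per dollar versus the baseline.

def relative_cost(price_perf_advantage):
    """Cost of the same job on the better-price-performance option,
    as a fraction of the baseline cost."""
    return 1.0 / (1.0 + price_perf_advantage)

baseline_cost = 100_000  # hypothetical baseline job cost in dollars

for adv in (0.30, 0.40):
    cost = baseline_cost * relative_cost(adv)
    saved = 1 - relative_cost(adv)
    print(f"{adv:.0%} better price-performance -> ${cost:,.0f} ({saved:.1%} saved)")
```

Under that assumption, a 30% to 40% price-performance advantage works out to roughly 23% to 29% lower cost for the same job, not 30% to 40%.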

A single Trn2 instance delivers 20.8 petaflops of FP8 compute. Each instance bundles 16 Trainium2 chips with 1.5TB of high-bandwidth memory (HBM), 192 vCPUs, and 2TB of RAM. According to AWS, Trn2 instances are ideal for enterprise customers looking to train and deploy large language models with billions of parameters.

Going up the performance ladder, AWS also announced Trainium2 UltraServers, which combine 64 Trainium2 chips across four Trn2 instances to deliver up to 83.2 petaflops of FP8 compute. With 6TB of HBM and 185 TBps of memory bandwidth, the UltraServers are positioned to support larger foundation models.
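The instance and UltraServer figures are consistent with each other once 20.8 petaflops is read as the FP8 total for one 16-chip Trn2 instance. The quick cross-check below derives per-chip compute by division; that per-chip value is computed here, not quoted from AWS. Compute is expressed in teraflops to keep the arithmetic in integers, and HBM uses decimal units.

```python
# Cross-check of the Trn2 figures cited in this note (FP8 compute).
# Per-chip compute is DERIVED by division, not an AWS-quoted spec.

TRN2_INSTANCE_CHIPS = 16
TRN2_INSTANCE_FP8_TFLOPS = 20_800   # 20.8 petaflops per Trn2 instance
TRN2_INSTANCE_HBM_GB = 1_500        # 1.5TB of HBM per Trn2 instance
INSTANCES_PER_ULTRASERVER = 4

per_chip_tflops = TRN2_INSTANCE_FP8_TFLOPS // TRN2_INSTANCE_CHIPS
ultraserver_chips = TRN2_INSTANCE_CHIPS * INSTANCES_PER_ULTRASERVER
ultraserver_tflops = per_chip_tflops * ultraserver_chips
ultraserver_hbm_gb = TRN2_INSTANCE_HBM_GB * INSTANCES_PER_ULTRASERVER

print(f"Per chip:    {per_chip_tflops / 1000} PFLOPS FP8")
print(f"UltraServer: {ultraserver_chips} chips, "
      f"{ultraserver_tflops / 1000} PFLOPS FP8, "
      f"{ultraserver_hbm_gb / 1000} TB HBM")
```

Four instances of 16 chips at roughly 1.3 petaflops each give the 64 chips and 83.2 petaflops quoted for the UltraServer, and 4 x 1.5TB gives its 6TB of HBM.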

To connect these chips, AWS developed NeuronLink, a high-speed, low-latency chip-to-chip interconnect. For a parallel to this back-end network, think of NVIDIA’s NVLink. Interestingly, AWS is a member of the UALink Consortium, so I’m curious whether NeuronLink is tracking the yet-to-be-finalized UALink 1.0 specification.

Finally, AWS is partnering with Anthropic to build an UltraCluster named Project Rainier, which will scale to hundreds of thousands of Trainium2 chips to train Anthropic’s next generation of models.

What does all of this mean? Is AWS suddenly taking on NVIDIA (and other GPU players) directly? Is this some big move in which AWS will nudge, or even push, its customers toward Trn2 instances instead of P5/P6 instances? I don’t think so. I believe AWS is following the Graviton playbook, which is simple: put out great silicon that delivers value and let customers choose what works best for them. For many, that choice will mean continuing to use NVIDIA, because they have built their entire stacks around not just Hopper or Blackwell chips but also NVIDIA software. For others, Trn2 instances paired with Neuron (the AWS SDK for its AI chips) will be the optimal choice. Either way, the customer benefits.

Over time, I believe Trainium’s adoption will follow a trajectory similar to Graviton’s. Yes, more and more customers will select this accelerator as the foundation of their generative AI projects. But many others will choose Blackwell and the NVIDIA chips that follow. With this market growing at a torrid pace, everybody wins.

AWS also announced Trainium3, which is expected to be available in 2025. As one would expect, AWS is positioning it as another significant leap in performance and power efficiency. The message is simple: AWS intends to deliver value throughout the enterprise AI journey, and it is taking a long-term approach to doing so.

Q Developer and Modernization

Another area I found very interesting was the use of AI agents to modernize IT environments. Amazon Q is AWS’s generative AI assistant, which one could consider similar to the better-known Microsoft Copilot. Q Developer is the version of Q aimed at software development and IT work, helping with tasks such as writing, testing, and transforming code.

While Q Developer is interesting for several reasons, I found its role in digital transformation the most compelling. At re:Invent, AWS introduced Q Developer transformation capabilities for three environments: Microsoft .NET, mainframe applications, and VMware. The VMware transformation is of particular interest to me, given how much noise there has been in the market about VMware since Broadcom acquired it. With Q Developer, AWS has built agents that migrate VMware virtual machines to EC2 instances, removing those workloads’ dependency on VMware. The process starts with collecting on-premises server and network data and feeding it into Q Developer, which then proposes migration waves that IT staff can accept or modify as necessary. Next, Q Developer builds out and continuously tests the target AWS network. Finally, the customer selects which waves to migrate, and migration begins.
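To illustrate what "migration waves" means in practice, here is a hypothetical sketch of one simple way to plan them. It rests on two assumptions: servers that talk to each other over the network should move together (found as connected components with union-find), and each wave has a maximum size. The server names, function names, and packing rule are all invented for illustration. This is not Q Developer’s algorithm, which AWS has not described at this level.

```python
# Hypothetical migration-wave planner, NOT Q Developer's actual algorithm.
# Assumption 1: servers connected on the network form a "move group".
# Assumption 2: move groups are packed first-fit into size-limited waves.
from collections import defaultdict

def move_groups(servers, connections):
    """Group servers into connected components using union-find."""
    parent = {s: s for s in servers}

    def find(s):
        while parent[s] != s:
            parent[s] = parent[parent[s]]  # path halving
            s = parent[s]
        return s

    for a, b in connections:
        parent[find(a)] = find(b)

    groups = defaultdict(list)
    for s in servers:
        groups[find(s)].append(s)
    # Largest groups first, so first-fit packing wastes less room.
    return sorted(groups.values(), key=len, reverse=True)

def plan_waves(servers, connections, max_wave_size):
    """Pack whole move groups into waves of at most max_wave_size servers."""
    waves = []
    for group in move_groups(servers, connections):
        if len(group) > max_wave_size:
            raise ValueError(f"group {group} exceeds wave size; needs manual review")
        for wave in waves:
            if len(wave) + len(group) <= max_wave_size:
                wave.extend(group)
                break
        else:
            waves.append(list(group))
    return waves

if __name__ == "__main__":
    servers = ["web1", "app1", "db1", "hr-app", "hr-db", "ldap1"]
    connections = [("web1", "app1"), ("app1", "db1"), ("hr-app", "hr-db")]
    for i, wave in enumerate(plan_waves(servers, connections, 4), start=1):
        print(f"Wave {i}: {wave}")
```

In this toy example, the three-tier web application and the standalone directory server land in one wave, while the HR application and its database move together in a second. As in the workflow AWS described, a proposed plan like this is a starting point that IT staff would review and adjust.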

While Q Developer won’t be perfect or eliminate all of the work of decoupling from VMware, it will help with some of the most complex elements of migration. That can save an enterprise IT organization months of time and significant money, which is what makes Q Developer so provocative and disruptive. I could easily see the good folks at Azure and Google Cloud building similar agents for the same purpose.

Q Developer for .NET and for mainframe are also highly interesting, albeit far less provocative. Of the two, I find the mainframe modernization effort more compelling, as it deconstructs monolithic mainframe applications and refactors them into Java. I like what AWS has done here and can see the value, especially given the COBOL skills gap in many organizations. More importantly, there is a skills gap in understanding and running mainframe and cloud environments in parallel.

With all this said, don’t expect Q Developer to spell the end of the mainframe. Mainframes are still in use for a reason, 70 years after the first commercial mainframes and 60 years after IBM’s System/360 revolutionized the computing market. It is not because they are hard to migrate away from; it is because of their security and performance, especially in transaction processing at the largest scales. Still, Q Developer for mainframe modernization is pretty cool and can certainly help, especially as a code assistant for a workforce trying to understand and maintain COBOL code written decades ago.

Final Impressions

I’ve been attending tech conferences for a long time. My first was Macworld in 1994. Since then, I’ve attended every major conference at least a few times. AWS re:Invent 2024 was by far the largest and busiest conference I’ve attended.

While there was so much news to absorb, I found these announcements around Trainium and Q Developer to be the most interesting. Again, my Moor Insights & Strategy colleagues Robert Kramer and Jason Andersen, along with our founder and CEO Patrick Moorhead, will have their own perspectives. Be sure to check them out.