Intel unveiled its 5th Gen Xeon Scalable CPU, codenamed Emerald Rapids, to the world last week, emphasizing its performance, power, and security. At the New York City launch event, the company focused on AI as the hero workload. I am starting to think this AI focus was a requirement for every tech company in 2023.

While GPUs and accelerators have dominated the news for the last few weeks, Intel’s launch of Emerald Rapids stands out for several reasons. In the following sections, I’ll explain why this launch was significant and go into greater detail.

The significance of Emerald Rapids

When Intel CEO Pat Gelsinger returned to the company in 2021 after a successful run as the CEO of VMware, he had to tackle two fundamental challenges. First, the company had lost its technology leadership to its archrival AMD, which had entirely transformed itself and returned from the brink of failure. Second, Intel had lost the one thing it had always been known for: consistent execution against its product and manufacturing roadmap.

To respond to the perception of Intel’s newfound inability to deliver products as defined and as scheduled, Gelsinger made a very bold commitment in 2021 to advance by five process nodes in four years. The goal was to catch up to and eventually leapfrog the manufacturing prowess of other chip giants such as TSMC and Samsung while doing the same from a chip design standpoint.

To put the boldness of this commitment in perspective, the time it takes to design and manufacture a CPU generation is measured in years. A new process node routinely takes two full years to implement, and that’s if you’re pressing hard. Under Gelsinger’s plan, Intel committed to shrinking this to less than a year per node.
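To put numbers on that cadence, here is a back-of-the-envelope calculation. It is a simple sketch that assumes the four years are a flat 48 months and treats the two-year figure above as the typical baseline:

```python
# Back-of-the-envelope cadence math for "five nodes in four years".
# Assumptions: a flat 48-month window and a 24-month "typical" node cadence.
MONTHS_IN_PLAN = 4 * 12
NODES_IN_PLAN = 5
TYPICAL_MONTHS_PER_NODE = 24

# Average time Intel can spend on each node under the plan.
months_per_node = MONTHS_IN_PLAN / NODES_IN_PLAN

# How much faster that is than the typical two-year cadence.
speedup_vs_typical = TYPICAL_MONTHS_PER_NODE / months_per_node

print(f"{months_per_node:.1f} months per node, "
      f"{speedup_vs_typical:.1f}x the typical cadence")
```

That works out to roughly 9.6 months per node, or about two and a half times the usual pace.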

Fast-forward to early 2023, when the company launched its much-anticipated (and delayed) 4th Gen Xeon Scalable processor, codenamed Sapphire Rapids. At that launch, Intel’s strategy became clear to an analyst like me. Rather than focus on top-level specifications such as core counts, Intel’s engineers focused on accelerating real-world performance. That meant identifying which factors contribute to application performance and latency, then building silicon that offloads those functions to dedicated acceleration engines capable of delivering fast, reliable real-world performance. (You can read my coverage of Sapphire Rapids here.)

With Emerald Rapids, Intel continues along the path of using architectural design elements that deliver real-world application performance improvements. While the company has focused heavily on AI for Emerald Rapids (and at this point, it seems almost a dereliction of marketing duty not to focus on AI), virtually every workload should see improvements over the previous generation because of the capabilities detailed in the next section.

De-lidding the CPU — Emerald Rapids technology overview

Emerald Rapids is built on the Intel 7 process node. The three areas that Intel seems to have dialed in on when designing the chip are performance, power, and security.

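Power and performance gains are usually a natural byproduct of a process shrink (e.g., moving from 10nm to 7nm). Emerald Rapids, however, stays on Intel 7, the same node as Sapphire Rapids, which makes the company’s ability to deliver significant gains across all three vectors all the more impressive.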

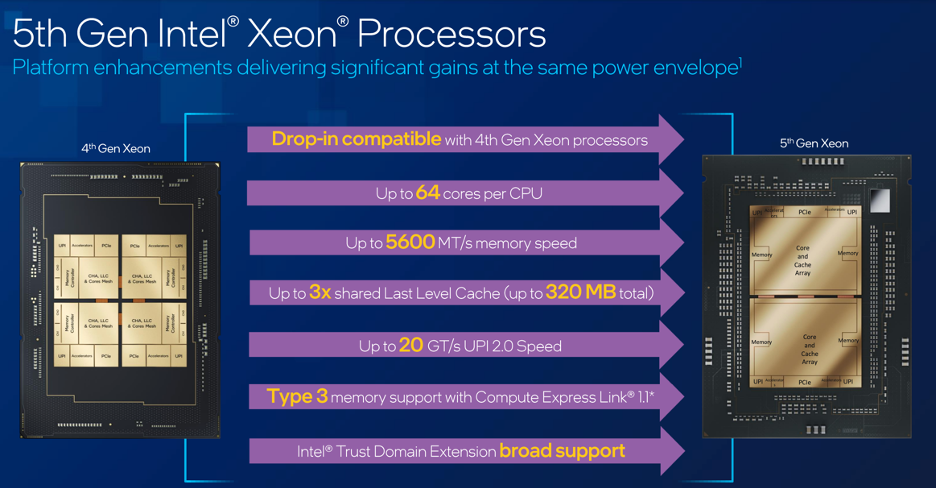

Application performance is dependent on several factors: the design of cores, how fast data can move from memory to cores, how quickly data can move across compute tiles, and how far data resides from the CPU. In each of these areas, Emerald Rapids delivers measurable improvements over Sapphire Rapids, as detailed below:

- While the maximum core count rose only modestly from Sapphire Rapids to Emerald Rapids (64 cores versus 60), the underlying core (Raptor Cove) delivers improved performance over its predecessor (Golden Cove).

- Emerald Rapids moved from Sapphire Rapids’ four-tile design to a two-tile design with up to 32 cores per tile. Larger applications and VMs can therefore fit within a single die instead of reaching across to resources on another die, which improves application performance.

- The Ultra Path Interconnect (UPI) links that connect processors in multi-socket servers received a significant speed boost (20 GT/s versus 16 GT/s in Sapphire Rapids), enabling faster data movement between sockets. So, if an application does need to access memory or other resources attached to the second socket, it can do so considerably faster.

- Emerald Rapids supports DDR5-5600 memory (5,600 MT/s), while Sapphire Rapids supports up to DDR5-4800.

- L3 cache nearly tripled, to as much as 320 MB in Emerald Rapids, compared with up to 112 MB in Sapphire Rapids.
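The figures in the list above can be turned into generation-over-generation ratios with a few lines of Python. This is a minimal sketch that uses only the top-end numbers quoted in this article (the dictionary keys are illustrative names of my own). The per-channel bandwidth line assumes the standard 64-bit DDR5 channel and says nothing about how many channels a particular SKU populates.

```python
# Generation-over-generation ratios for the spec bullets above.
# DDR5 and UPI speeds are transfer rates (MT/s and GT/s), not clock frequencies.
SAPPHIRE_RAPIDS = {"ddr5_mts": 4800, "upi_gts": 16, "l3_mb": 112}
EMERALD_RAPIDS = {"ddr5_mts": 5600, "upi_gts": 20, "l3_mb": 320}

# A DDR5 channel carries 64 data bits (two 32-bit subchannels): 8 bytes per transfer.
BYTES_PER_TRANSFER = 8


def ratio(spec: str) -> float:
    """Emerald Rapids value divided by the Sapphire Rapids value."""
    return EMERALD_RAPIDS[spec] / SAPPHIRE_RAPIDS[spec]


def peak_channel_bandwidth_gbs(mts: int) -> float:
    """Theoretical peak bandwidth of one DDR5 channel, in GB/s."""
    return mts * BYTES_PER_TRANSFER / 1000


for spec in SAPPHIRE_RAPIDS:
    print(f"{spec}: {ratio(spec):.2f}x")
print(f"per-channel peak: {peak_channel_bandwidth_gbs(4800):.1f} -> "
      f"{peak_channel_bandwidth_gbs(5600):.1f} GB/s")
```

Theoretical peaks like these set an upper bound. They don't predict how much of that headroom a given application will actually use.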

When looking at these enhancements, one can see how Intel achieved such overall performance gains from a CPU running at the same power envelope and on the same process node.

Acceleration matters

As with any CPU, IT folks ultimately care about how fast their business-critical workloads run. So, when the marketing folks are done promoting a synthetic benchmark that shows one CPU embarrassingly outperforming another one, IT organizations will typically bring in servers with both CPUs to see how they perform in the real world. I did it when I was an IT executive, and I believe it’s an exercise that IT organizations should continue to perform regularly.
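In that spirit, here is a minimal, hypothetical harness for timing a workload the same way on each candidate server. It is a sketch under simple assumptions: the workload can be wrapped in a Python callable, wall-clock time is the metric that matters, and the median of several runs is a fair summary. `benchmark` and `sample_workload` are illustrative names, not part of any vendor toolkit.

```python
import statistics
import time
from typing import Callable


def benchmark(workload: Callable[[], object], runs: int = 7, warmup: int = 2) -> dict:
    """Time a workload repeatedly and summarize wall-clock results in seconds.

    Warm-up runs are discarded so caches and CPU frequency scaling have a
    chance to settle before measurement starts.
    """
    if runs < 2:
        raise ValueError("runs must be at least 2 to estimate spread")
    for _ in range(warmup):
        workload()
    samples = []
    for _ in range(runs):
        start = time.perf_counter()
        workload()
        samples.append(time.perf_counter() - start)
    return {
        "median_s": statistics.median(samples),
        "min_s": min(samples),
        "stdev_s": statistics.stdev(samples),
    }


# Hypothetical stand-in for a business-critical job; replace it with your own.
def sample_workload() -> int:
    return sum(i * i for i in range(200_000))


if __name__ == "__main__":
    print(benchmark(sample_workload))
```

Running the identical script on both servers and comparing medians keeps the comparison about your code rather than a benchmark someone else chose.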

With this said, I like that Intel’s approach with Emerald Rapids continues what the company focused on with its predecessor: delivering acceleration that benefits the actual workloads that enterprise IT organizations and cloud providers are deploying at scale. AI, analytics, database, web, and cloud-native applications are expanding across the modern data estate from on-prem to the cloud and the edge. The related performance requirements dictate some level of acceleration—acceleration that Emerald Rapids can deliver through its engines.

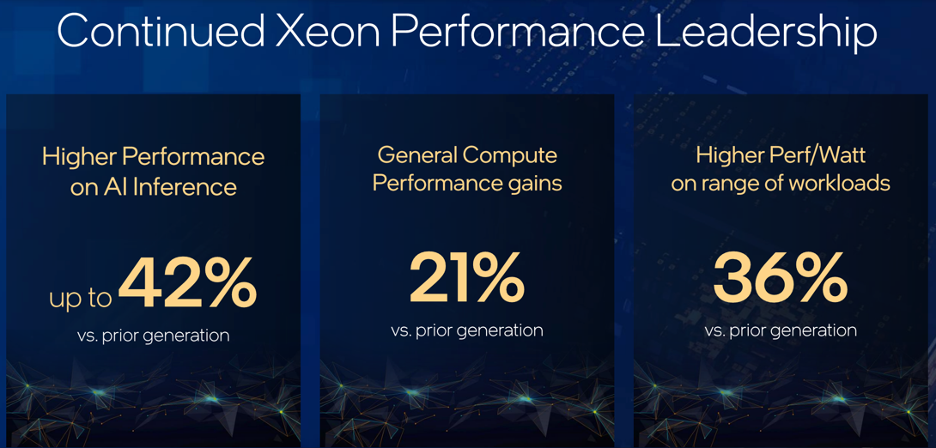

As the chart shows, the benefit of acceleration engines combined with the architectural enhancements in Emerald Rapids has led to significant performance gains for target workloads.

While the focus of every organization seems to be on AI—where Emerald Rapids shows up to a 1.4x performance gain relative to Sapphire Rapids—the chart shows equally impressive performance boosts across all workloads.

What about power and security?

Power and security were also key focus areas for the team designing Emerald Rapids. On the power front, Emerald Rapids delivers about 1.34x the performance per watt of Sapphire Rapids, along with savings of about 100 watts when the CPU sits idle. These are significant numbers for IT organizations looking to drive sustainability gains (and cost savings).
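The two power figures translate into energy terms with simple arithmetic. The sketch below assumes the quoted 1.34x performance per watt and roughly 100 W idle savings carry over to your environment. The 30% idle fraction in the example is purely hypothetical, so plug in your own utilization data.

```python
# Sketch of what the quoted power figures mean for energy use.
# Assumptions: the 1.34x perf/W and ~100 W idle savings hold for your
# workload, and the idle fraction is your own estimate.
HOURS_PER_YEAR = 24 * 365


def energy_per_task_ratio(perf_per_watt_gain: float) -> float:
    """Energy needed for the same work on the new CPU, relative to the old one."""
    return 1 / perf_per_watt_gain


def annual_idle_savings_kwh(idle_watts_saved: float, idle_fraction: float) -> float:
    """kWh saved per server per year from lower idle power."""
    if not 0.0 <= idle_fraction <= 1.0:
        raise ValueError("idle_fraction must be between 0 and 1")
    return idle_watts_saved * HOURS_PER_YEAR * idle_fraction / 1000


print(f"energy per task: {energy_per_task_ratio(1.34):.2f}x")
# Hypothetical: the server sits idle 30% of the time.
print(f"idle savings at 30% idle: {annual_idle_savings_kwh(100, 0.30):.0f} kWh/yr")
```

Put another way, a 1.34x performance-per-watt gain means the same work needs roughly a quarter less energy (1/1.34 is about 0.75).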

On the security front, Intel strengthened its offering by complementing the application-level protection of Software Guard Extensions (SGX) with VM-level protection from Trust Domain Extensions (TDX). While SGX builds a secure enclave around an application and its data, TDX extends that isolation to an entire virtual machine environment. Based on the performance numbers the company shared, Emerald Rapids appears to achieve this with minimal added latency.
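For readers who want to see what a given Linux server or VM reports, here is a small sketch that scans `/proc/cpuinfo` for the relevant feature flags. It assumes a reasonably recent Linux kernel, which lists `sgx` when SGX is available and `tdx_guest` when the kernel is running inside a TDX trust domain. A missing flag can also mean the feature is disabled in firmware or unsupported by the kernel, so treat this as a first check, not proof.

```python
from pathlib import Path

# Linux lists CPU feature flags on the "flags" lines of /proc/cpuinfo.
# "sgx": the kernel sees Software Guard Extensions support on this CPU.
# "tdx_guest": the kernel is running inside a TDX trust domain (a protected VM).
SECURITY_FLAGS = ("sgx", "tdx_guest")


def security_flags(cpuinfo_text: str) -> dict:
    """Report which of SECURITY_FLAGS appear in /proc/cpuinfo-style text."""
    present = set()
    for line in cpuinfo_text.splitlines():
        key, _, value = line.partition(":")
        # Match only the plain "flags" key, not e.g. "vmx flags".
        if key.strip() == "flags":
            present.update(value.split())
    return {flag: flag in present for flag in SECURITY_FLAGS}


if __name__ == "__main__":
    cpuinfo = Path("/proc/cpuinfo")
    if cpuinfo.exists():
        print(security_flags(cpuinfo.read_text()))
```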

How does Emerald Rapids compare competitively?

For most people who follow the chip wars, the first company to think of as a competitor for Xeon is AMD with its EPYC CPU. If you’re one of those people, you’re likely aware that EPYC still enjoys an advantage in top-level specifications.

But here’s the question: Does a top-end count of 96 cores versus 64 cores matter to an enterprise IT organization when it’s deploying servers that have only 16 or 32 cores per socket? I don’t believe so.

Here’s my advice to enterprise IT executives. Don’t rely on published benchmarks. Instead, rely on the performance numbers you see as you run your workloads on these servers. And be sure to perform a cost-benefit analysis on that basis.
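A cost-benefit comparison on that basis can be as simple as dividing the work each server delivers by what it costs to buy and run. The sketch below is one hedged way to frame it. The class, its fields, the four-year service life, and the $0.12/kWh electricity price are all illustrative assumptions. It also ignores software licensing, cooling, and rack-space costs, which may matter just as much in your environment.

```python
from dataclasses import dataclass


@dataclass
class ServerOption:
    """One candidate server, measured on your own workload (hypothetical fields)."""
    name: str
    throughput: float          # work units per hour from your own benchmark runs
    price_usd: float           # acquisition cost
    avg_power_w: float         # measured average draw under your load
    years: float = 4.0         # planned service life (assumption)
    usd_per_kwh: float = 0.12  # electricity price (assumption)

    def total_cost_usd(self) -> float:
        """Purchase price plus electricity over the service life."""
        energy_kwh = self.avg_power_w * 24 * 365 * self.years / 1000
        return self.price_usd + energy_kwh * self.usd_per_kwh

    def work_per_dollar(self) -> float:
        """Lifetime work per dollar, assuming the server runs at measured throughput."""
        lifetime_work = self.throughput * 24 * 365 * self.years
        return lifetime_work / self.total_cost_usd()


def best_value(options: list[ServerOption]) -> ServerOption:
    """Pick the option that delivers the most work per dollar."""
    return max(options, key=lambda o: o.work_per_dollar())


if __name__ == "__main__":
    # Hypothetical measurements; substitute numbers from your own test runs.
    candidates = [
        ServerOption("server_a", throughput=1_000, price_usd=20_000, avg_power_w=600),
        ServerOption("server_b", throughput=1_150, price_usd=23_000, avg_power_w=650),
    ]
    print(best_value(candidates).name)
```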

A more interesting discussion may be around Xeon and EPYC versus the Arm chips deployed in the cloud, whether from Ampere or designed in-house (e.g., Graviton from AWS and Cobalt from Microsoft Azure). The competitive landscape becomes much more interesting as these Arm-based servers expand into the two-socket space (read my coverage here).

Closing thoughts

Intel’s launch of its 5th Gen Xeon Scalable Processor demonstrates that the company understands it must deliver real value to the market to maintain its competitive position. While Sapphire Rapids did hit the market late, it showed how the company would deliver that value—higher performance through clever architecture and better acceleration, power, and security. Emerald Rapids continues on the same path.

These less flashy architectural enhancements have led to considerable performance gains in Emerald Rapids. I’m interested to see what 2024 looks like as the company continues its five-nodes-in-four-years strategy.

One thing is for sure—the chip wars have heated up again, and it will be fun to see how they unfold.