As AI hype has taken hold in the mainstream, people are beginning to make assumptions that are not necessarily true. For instance, AI is a lot more than generative AI or natural language processing, so instead of calling it “AI hype,” it might be better characterized as “GenAI hype” or “NLP hype.” Another pervasive theme is that when it comes to large language models, bigger is better. Also not true. Last week when I attended IBM Think 2024, I collected a few data points suggesting that smaller, purpose-built AI models are quite useful—and better for certain use cases.

IBM and Red Hat Open Up Granite LLMs

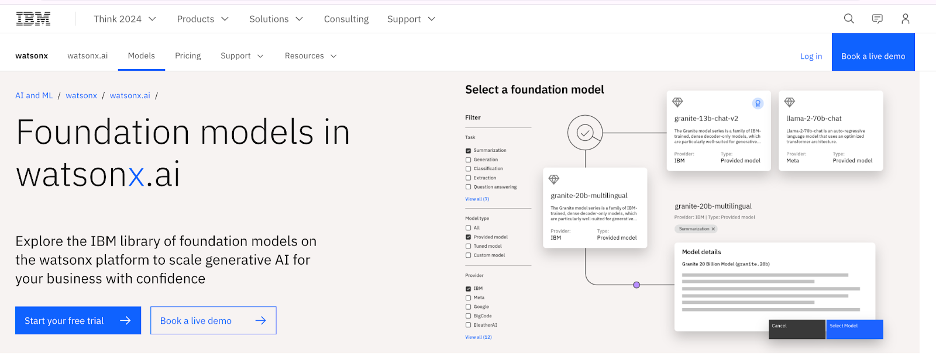

Earlier this month, IBM and its Red Hat unit announced the open-sourcing of their Granite foundation and embedded models. (For more on Red Hat, take a look at my recent research note on the Red Hat Summit.) Open-sourcing these models makes it easy for anyone to download and possibly modify a pre-built LLM to use for an AI-based application. IBM also provided the models under an Apache 2.0 license. This is a big deal because it means that IBM has mitigated common privacy and indemnity concerns that can accompany some open source projects. While the Red Hat announcement was big news in its own right, IBM was also able to provide more clarity at the Think conference about the technical implications of open-sourcing Granite.

The most important part of the announcement is that IBM released many models, not just a single model. More specifically, IBM released 18 models of different sizes for different use cases. The company is also partnering with other organizations such as Adobe and Mistral AI, taking an open approach to AI models that aims to enable multi-company collaboration and increase transparency about the models' inner workings.

How IBM’s Approach Helps Enterprise Customers

IBM’s packaging strategy is one of providing the right tools for the right job. I believe this multi-model approach is more powerful for enterprise customers than deploying the more consumer-oriented models (for instance, ChatGPT and Google Gemini) in common use today. There are a couple of reasons for this.

First, the size of any given model will impact its performance and cost. With Granite, the customer can elect to use a smaller version of a model in pre-production scenarios for development and testing before moving to a larger model in production. This can save time and money in the development process. For what it’s worth, IBM also shared that the 8-billion-parameter model size is currently something of a sweet spot in terms of performance, cost, and end-user precision. (For context, IBM has model sizes of 3, 8, and 20 billion parameters.) Naturally, that should be taken as a point-in-time statement, because hardware (GPUs and other silicon) and software are all improving rapidly. But it is notable because an 8-billion-parameter model is orders of magnitude smaller than the marquee numbers you will hear from the makers of consumer-oriented LLMs.
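To make the size-versus-cost point concrete, here is a minimal sketch, assuming a team maps each deployment stage to a model size and wants a rough estimate of the memory needed just to hold the weights. The stage names ("dev", "test", "prod"), the stage-to-size mapping, and the 2-bytes-per-parameter figure (16-bit weights) are illustrative assumptions, not IBM guidance; real deployments also need memory for activations and caches.

```python
# Hypothetical sketch: assign a smaller model to early stages and a larger one
# to production, then estimate weight memory. Stage names and the mapping are
# illustrative assumptions; sizes follow the 3B/8B/20B figures in the text.

BYTES_PER_PARAM_FP16 = 2  # 16-bit weights; ignores activations and caches

MODEL_SIZES_BILLIONS = {
    "dev": 3,    # fast, cheap iteration during development
    "test": 8,   # the size described as a current sweet spot
    "prod": 20,  # larger model where quality justifies the cost
}


def weight_memory_gb(params_billions: float, bytes_per_param: int = BYTES_PER_PARAM_FP16) -> float:
    """Approximate memory (decimal GB) needed to store the weights alone.

    One billion parameters at N bytes each is N decimal gigabytes.
    """
    return params_billions * bytes_per_param


def model_for_stage(stage: str) -> int:
    """Return the parameter count (in billions) assigned to a deployment stage."""
    if stage not in MODEL_SIZES_BILLIONS:
        raise ValueError(f"unknown stage: {stage!r}")
    return MODEL_SIZES_BILLIONS[stage]


if __name__ == "__main__":
    for stage in ("dev", "test", "prod"):
        size = model_for_stage(stage)
        print(f"{stage}: {size}B params, ~{weight_memory_gb(size):.0f} GB of fp16 weights")
```

Under these assumptions, the 3B model needs roughly 6 GB for its weights and the 20B model roughly 40 GB, which is one reason iterating on a smaller model before scaling up can save money.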

The other factor is use case: a model’s specific algorithms and training determine what it is best suited for. That contrasts with the approach of many vendors, which are pushing their models for general-purpose use. While these giant models are highly versatile, they are harder to train, use, and even maintain. On the maintenance front, IBM also claims that it will keep updating the Granite models, potentially as often as weekly depending on the model. Therefore, users will not need to wait long for new versions as the models improve.

The Advantages of Purpose-Built LLMs

This all sounds great, but real-world usage can be a different story. To address this point, IBM provided a very interesting bit of news to support its strategy. One of the Granite models is being used for an upcoming product called IBM watsonx Code Assistant for Java. This product is similar to other coding assistants available today, but it is specifically trained for the Java programming language by IBM’s own team of Java experts. The company claims a 60% boost in Java coding productivity using this tool. This is significantly higher than the average 30% to 40% increase seen with other general-purpose coding assistants in the market. IBM attributes this to the purpose-built nature of the model and its highly specific training to support the code assistant.

Interestingly enough, Oracle has also announced its intention to release a Java code assistant at some point. (Here is a link to my analysis of Oracle’s announcement about this.) Oracle, too, is saying that its code assistant will perform better than ones based on general-purpose models. When I spoke to Oracle about this recently, my contacts said they believe the higher performance comes from using a better-optimized and better-trained model. This makes intuitive sense, given that Oracle and IBM likely have the deepest understanding of Java in the industry. In the absence of an industry-certified benchmark, similar results reported by two independent competitors do suggest a trend.

For the Right Use Cases, Think Small for Rapid Success

Enterprises seeking to create rapid business value from AI may want to start thinking smaller. It appears that solutions based upon well-sized models are faster, cheaper, and—with the right training—more precise. This also supports the age-old notion that to make a business solution impactful and sticky, it ought to be tailored for a specific business problem and usable for a well-defined set of stakeholders.

Finally, for anyone concerned about AI taking their jobs, your specialized knowledge is important for helping make an AI model the best it can be. The large general-purpose LLMs are trained by consuming as much data as possible, which is sort of analogous to memorizing a dictionary. By contrast, these more specifically focused models are “taught” by experts in a specific area. This process is more like a class being taught by an experienced professor. For a proper base of knowledge in a given domain, you ultimately need both the dictionary and the teacher, not one or the other. That’s why I think smaller, purpose-built AI is the answer in some cases—and why human experts aren’t about to be replaced.