The annual Computex trade show in Taiwan almost always produces lots of news from the most prominent silicon providers. This year was no different as the CEOs of NVIDIA, AMD, Intel, and Arm took the main stage to demonstrate their companies’ latest innovations and products.

As one would expect, each company’s messaging and positioning highlighted its strength in enabling AI, from client to datacenter. I’ve taken a while to write this post because I wanted to watch the keynotes one more time and see what kind of impact some of these messages would have throughout the ecosystem of OEMs, ODMs, and ISVs. In the following sections, I’ll recap what I heard at the show, what I made of it, and what it all means for the datacenter market moving forward.

NVIDIA Continues to Build Moats and Walls

NVIDIA currently owns the AI space, in terms of both market share and awareness. Its Hopper and Blackwell GPUs, along with its Arm-based Grace CPU, are foundational to virtually every cloud provider’s AI training offerings, and NVIDIA has even used its financial power to fund and own part of the nascent GPU cloud market (the company is an investor in and supplier to investor-darling CoreWeave).

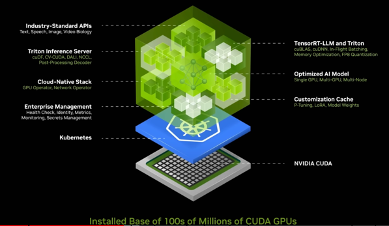

In addition to making GPUs that dominate the AI market, the company has built a software stack that extends from the platform level (CUDA) all the way up to industry-specific models. As CEO Jensen Huang describes it, the company has created an AI factory.

NIM (NVIDIA Inference Microservices) is perhaps the company’s most significant software solution since CUDA was introduced in 2006. While CUDA provides a framework and programming model that lets developers target any NVIDIA GPU, NIM is where the company goes the last mile in enabling application developers to integrate GenAI into their applications quickly and easily. NIM contains trained models and inferencing engines packaged into a container. (It uses Triton Inference Server and TensorRT for its inferencing engine.) This allows developers to deploy this container anywhere (on-prem, private cloud, public cloud) on their NVIDIA GPU of choice and simply point to it using an API call within their application.
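To make the "point to it using an API call" idea concrete, here is a minimal sketch of what that call might look like from an application. It assumes the deployed container exposes an OpenAI-style chat completions endpoint over HTTP, which is my understanding of how NIM containers for language models are typically set up. The URL, port, and model name are placeholders I made up for illustration, so check the documentation for the specific container you deploy.

```python
import json
import urllib.request

# Assumptions (not from the keynote): the address and model name below are
# placeholders for a NIM container running somewhere you can reach.
NIM_URL = "http://localhost:8000/v1/chat/completions"  # hypothetical address
MODEL = "meta/llama3-8b-instruct"  # hypothetical model identifier


def build_chat_request(prompt, url=NIM_URL, model=MODEL):
    """Build (but do not send) an HTTP POST for a chat-completions style API."""
    payload = {
        "model": model,
        "messages": [{"role": "user", "content": prompt}],
        "max_tokens": 64,
    }
    return urllib.request.Request(
        url,
        data=json.dumps(payload).encode("utf-8"),
        headers={"Content-Type": "application/json"},
        method="POST",
    )


def ask(prompt):
    """Send the request and return the first reply; needs a running container."""
    with urllib.request.urlopen(build_chat_request(prompt), timeout=30) as resp:
        body = json.load(resp)
    # Assumes an OpenAI-style response shape with a "choices" list.
    return body["choices"][0]["message"]["content"]
```

The point of the sketch is that the application only knows a URL and a JSON payload. Where the container runs, and on which GPU, stays out of the application code.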

For NIM to be successful, it requires broad ecosystem support, and of course NVIDIA has put a lot of effort into building those partnerships. The company has support from the usual suspects in the AI business (Hugging Face, Mistral, Meta, Stability AI, Adobe, etc.), and I’m sure this list will grow.

Many analysts and pundits would say that CUDA created a deep moat for NVIDIA relative to its competition, and I would agree. However, I think that NIM and the company’s enterprise AI initiative make up the castle walls that sit inside that moat. These tools harness the benefits of GenAI to deliver value to the enterprise—something that many organizations have struggled to realize.

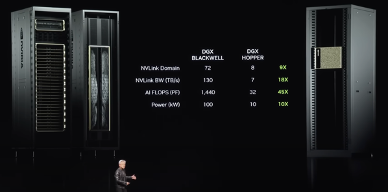

I would be remiss in not mentioning NVIDIA’s Blackwell DGX, and what an impressive machine it is. The DGX system is a pod that contains 72 Blackwell GPUs paired with 36 Grace CPUs, all tied together via the company’s NVLink Switch and NVLink Spine. So, to developers and applications, this looks like one giant Blackwell GPU.

The result is what one would expect. Performance gains are off the charts compared to its predecessor (DGX Hopper), plus there is an increase in performance per watt.

While I expect NVIDIA to sell more of these products than it can ship, I don’t see customer applicability beyond hyperscalers and large enterprises. This level of performance will come at a prohibitive cost. I expect to see OEM-enabled MGX Blackwell platforms filling pipelines for customers downmarket from hyperscalers and large enterprises.

All in all, it was a strong delivery from Huang. While I didn’t see anything new announced from a datacenter perspective (all of those announcements were made at the company’s GTC event in March), he highlighted the company’s strengths quite effectively.

AMD Focuses on Datacenter Modernization, AI, and the Competition

Next up at Computex was AMD’s CEO, Dr. Lisa Su. While Su delivered a wide-ranging keynote that spanned from the client to the datacenter, I will focus on what she said regarding the company’s EPYC server CPU and Instinct AI accelerator.

Su started by giving an overview of the Zen 5 architecture, which is the basis for both client (Ryzen) and server chips. Architecturally, AMD has made improvements, including deploying a dual parallel pipeline for better branch prediction (better in terms of both accuracy and latency). AMD has also doubled Zen 5’s instruction and data bandwidth, both on the front end and from the cache to the floating point unit. Finally, the Zen team boosted AI performance considerably with AVX-512, a set of instructions to improve vector processing. All said, it looks like improvements in Zen 5 will lead to a 16% increase in IPC performance.

Before getting into the 5th Gen EPYC (codenamed “Turin”), Su took a well-deserved victory lap for EPYC’s success, as it now commands a 33% market share. From less than a single point of share to 33% in four CPU generations in a very competitive server market is nothing short of remarkable.

By now, most industry watchers know the specs on Turin: 192 cores (384 threads) built on 13 chiplets in a mix of 3nm and 6nm node processes. Memory and I/O remain at the latest generations—DDR5 and PCIe Gen5, respectively. Because these newer generations were built into 4th Gen EPYC, Turin becomes drop-in compatible, with no new motherboard design required. This makes life easier for server manufacturers and IT customers alike.

Su began her datacenter pitch by focusing on modernization with EPYC. I like that she did this. All the hype in today’s world is AI-focused, but in reality, the IT estate is much bigger than an AI cluster (even if that AI cluster is more expensive). The story for modernization is what one would expect: most infrastructure populating the enterprise datacenter is five years old (or so). When replacing that infrastructure with servers powered by Turin processors, consolidation ratios are on the order of 5:1, with an 80% reduction in rack space and a 65% reduction in power consumption. This is in comparison to a 2nd Gen Xeon-based server (a fair comparison as Intel dominated the enterprise datacenter five or so years ago).
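The rack-space figure follows directly from the consolidation ratio, and the arithmetic is easy to check. The sketch below assumes rack space scales one-to-one with server count, and the 1,000-server fleet is a number I picked for illustration rather than anything from the keynote. The 65% power figure cannot be derived from the ratio alone, since it also depends on how much power each new server draws.

```python
import math


def servers_after_consolidation(old_servers, ratio):
    """Number of new servers needed if each one replaces `ratio` old ones."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return math.ceil(old_servers / ratio)


def footprint_reduction(ratio):
    """Fractional drop in server count (and rack space, if it scales 1:1)."""
    if ratio <= 0:
        raise ValueError("ratio must be positive")
    return 1.0 - 1.0 / ratio


old_fleet = 1000  # hypothetical fleet size, for illustration only
new_fleet = servers_after_consolidation(old_fleet, 5)
print(f"{old_fleet} servers -> {new_fleet} servers "
      f"({footprint_reduction(5):.0%} fewer)")
```

At 5:1, a 1,000-server estate shrinks to 200 servers, which is the 80% reduction quoted on stage.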

Turning to AI, the Instinct value prop was front and center, with a big emphasis on AMD’s hardware engineering. MI325X, the newest member of the Instinct family, pairs a large pool of high-bandwidth memory with 6TB/s of memory bandwidth, a combination that Su claims outperforms the most powerful NVIDIA GPU.

Further, AMD committed to delivering a new accelerator model every year. The MI325 lands in 2024, the MI350, built on the company’s CDNA 4 architecture, will hit the market in 2025, and the MI400, built on what was referenced as CDNA Next, will launch in 2026.

As NVIDIA did during its turn on stage, Su spent some time touting the advancements AMD has made in enabling the Instinct ISV ecosystem through its ROCm software and its partnerships. Ecosystem support is going to be critical for Instinct’s success beyond the hyperscaler market. Without servers, rich software support, and a strong go-to-market in services and customer support, Instinct will struggle to find broad adoption in the enterprise.

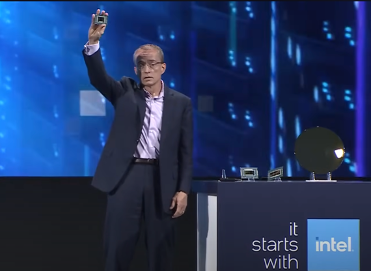

Intel Launches a Datacenter Refresh and Improves Gaudi Economics

After watching AMD’s and Intel’s keynotes back-to-back, I had to chuckle at how eerily similar CEO Pat Gelsinger’s keynote was to Dr. Su’s. I am certain this is a complete coincidence and evidence of how vital sustainability through modernization is to the market.

Gelsinger started his keynote with a fun factoid: Intel has sold 130 million Xeon CPUs. Yes, 130 million Xeons have been deployed in datacenters around the world. One marvels at how Intel built such an extensive hardware, software, and channel partner ecosystem to deliver that number. Another interesting point made was that in today’s market, 60% of an enterprise’s applications run in the cloud, while 80% of data resides on-prem.

As mentioned, modernization was top of mind, with the Xeon 6 E-core being the ideal building block. This core (codenamed “Sierra Forest”) was designed for efficient performance. The first Xeon 6 platform supporting the E-core will boast 144 cores, with ample memory and I/O to keep all of those cores fed and working. In early 2025, an AP (advanced performance) version will launch, supporting up to 288 cores on a single socket.

Intel’s solution for higher-performing workloads is the Xeon 6 P-core. This core (codenamed “Granite Rapids”) is designed for maximum performance, with all of the acceleration engines enabled to support out-of-the-box acceleration of AI and HPC. Granite Rapids SP (standard performance) will ship in Q3 of 2024, while its AP variant will ship in Q1 of 2025.

Like AMD, Intel boasted considerable savings when looking at Xeon 6 relative to its second generation. And like AMD, the benefit of modernization was dramatic, with a claim of 6 times the consolidation ratio and 2.6 times the performance per watt. It is clear that Intel has found its rhythm with Xeon 6 and believes it can stem the tide of AMD’s gains. Undoubtedly, the company has a product that is more than competitive.
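A performance-per-watt multiple can be translated into a power number if you hold the workload constant. The sketch below does only that conversion. It assumes the 2.6x claim applies uniformly to the work being moved, which is my simplification and not something Intel stated.

```python
def power_fraction_at_constant_work(perf_per_watt_gain):
    """Fraction of the old power needed to deliver the same throughput."""
    if perf_per_watt_gain <= 0:
        raise ValueError("gain must be positive")
    return 1.0 / perf_per_watt_gain


fraction = power_fraction_at_constant_work(2.6)
print(f"{fraction:.1%} of the old power, {1 - fraction:.1%} saved")
```

Under that assumption, 2.6 times the performance per watt works out to roughly 38% of the original power for the same work, or a saving of a little over 60%.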

Turning to Intel’s Gaudi AI accelerators, the story is about performance and value. While Intel has boasted strong performance numbers for Gaudi through the benchmarks of organizations such as MLCommons (MLPerf), I think the biggest story for any AI solution is whether enterprise IT organizations can deploy it and realize value. Cost is a significant element of this. At Computex, Gelsinger revealed Gaudi 2 kits at one-third the price of the Hopper GPU, with Gaudi 3 at two-thirds the cost. These are significant savings.

Further, Intel has done a strong job of building a server ecosystem for Gaudi, with vendors such as Dell, Lenovo, Supermicro, Asus, Wistron, and Gigabyte supporting it. This will be critical for enterprise adoption. Interestingly, HPE was missing from Intel’s impressive list of server partners. This is quite surprising. However, it aligns with what I saw at HPE Discover, where the company seemed almost exclusively married to NVIDIA for its AI strategy. This could be an opportunity for HPE’s competitors to find entry into an otherwise locked account.

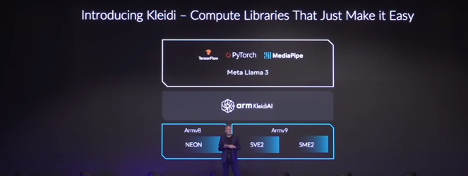

Arm Introduces the Kleidi Compute Libraries

Arm CEO Rene Haas covered quite a bit of ground in his keynote. This makes sense as the company’s IP portfolio touches virtually every market segment. However, one thing stood out as I listened: the importance of the software (ISV) ecosystem. Haas seems acutely aware of how vital the ISV ecosystem is to his company’s fortunes in every area.

In line with this, Haas announced Kleidi, a library that automatically maps AI frameworks to advanced instructions in the Arm architecture. This makes it easier for developers to take advantage of these significant capabilities.

Kleidi is the kind of tool that should prove to be significant for the Arm market. By removing the complexity associated with optimizing AI-driven applications, Arm is making its architecture a preferred choice for application developers. And if the optimizations deliver substantial performance gains, Arm makes its platform more compelling for everyone.

While Arm has carved out a good market position for itself with its performance-per-watt claims, Kleidi considerably expands on this value proposition. Although Neoverse-based platforms have previously resided exclusively in the cloud, I expect we will start seeing Arm find another home in the enterprise datacenter.

Final Thoughts

Each silicon vendor covered in this piece can point to Computex 2024 and claim some measure of victory. It was an event that demonstrated how dynamic this market is and how these four major players’ strategies align with and diverge from each other’s. Those who walked in favoring one vendor over another will have their biases validated. Conversely, those who went in with doubts about any vendor likely had those doubts challenged.

My big takeaway from Computex revolves less around product competitiveness and more around company strategy and momentum. It is clear that we are in the most competitive silicon market we’ve seen in a long time, perhaps ever. The real key will be which companies can best activate their ecosystem partners to drive momentum in the marketplace. Until that plays out, sit back and enjoy the ride.