AMD acquired graphics chip maker ATI in 2006. Since then, the company has been fighting an uphill battle to compete with NVIDIA in the datacenter graphics market. While several factors have contributed to this over time, the end result has been relatively straightforward: much like Intel in the CPU space before AMD launched EPYC, NVIDIA has owned the datacenter graphics space.

Fast forward to 2023, and AMD is in a different place. While NVIDIA has been busy dominating the AI market, AMD has quietly designed and built a legitimate competitor for datacenter accelerators with the MI300 series.

Is this when AMD finally breaks the NVIDIA stranglehold? What will it take to see these GPUs find momentum in both the cloud and enterprise markets? I’ll give my analysis in the following few sections.

How did we get here?

AMD currently has a tiny percentage of the datacenter GPU market, a market that is worth $45 billion today and is expected to grow to $400 billion in 2027. That works out to a compound annual growth rate (CAGR) of just over 70%. Meanwhile, NVIDIA dominates this market.
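For readers who want to check the growth arithmetic, here is a minimal sketch in Python. It assumes "today" means 2023, so the forecast spans four years; the function name `cagr` is just an illustrative helper, not something from AMD's materials.

```python
def cagr(start_value: float, end_value: float, years: int) -> float:
    """Compound annual growth rate between two values over `years` years."""
    return (end_value / start_value) ** (1 / years) - 1


# Market sizes quoted in the article, in billions of dollars.
# Assumption: the horizon is 2023 -> 2027, i.e. four years.
rate = cagr(45, 400, 4)
print(f"Implied CAGR: {rate:.1%}")
```

Under that four-year assumption the implied rate lands a little above 70%, which is consistent with the figure quoted above.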

While many understand this market dynamic, fewer understand how it came to be. When Lisa Su took the helm at AMD in late 2014, the organization faced fundamental challenges, from rebuilding its client business to figuring out how to re-enter and compete in the datacenter CPU market. Add to these factors the previously mentioned ATI GPU business, which had gone through a bit of churn since being acquired in 2006.

As Su and the team looked at rebuilding AMD, they could not fix every problem at once. Prioritizing the higher-volume CPU business proved to be smart, as we can see from the market share gains realized by both Ryzen and EPYC. With the CPU business stabilized, the company could dedicate more resources to its GPU/accelerator strategy. In 2020, AMD launched the first member of its Instinct MI family (the MI100) to compete in the HPC market. Three years later, the company has delivered what I consider both a continuation of its success in HPC and its first truly competitive AI accelerator.

Instinct MI300 overview

The MI300, AMD’s third generation of the Instinct family, has two separate products: the MI300X GPU and the MI300A APU, or accelerated processing unit. While the MI300A can be seen supporting HPC supercomputer clusters such as Lawrence Livermore National Laboratory’s El Capitan, the MI300X is targeted at the AI market. Let’s dive into a little more detail on each chip.

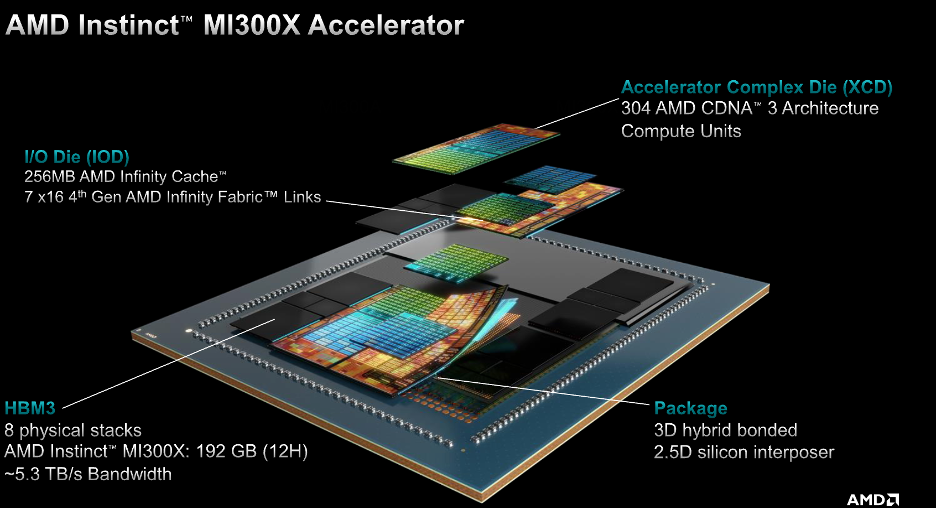

The MI300X comprises eight compute dies (XCDs) housing 304 CDNA 3 compute units, sitting on four I/O dies with 256MB of L3 cache and the AMD Infinity Fabric interconnect. These compute units are supported by 192GB of HBM3 memory with 5.3 TB/s of peak bandwidth. At a 750-watt thermal design power (TDP), this chip’s specifications compare favorably to the NVIDIA H100, which has considerably less memory capacity and bandwidth.
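To make the memory-capacity point concrete, here is a rough back-of-the-envelope sketch. It counts model weights only and ignores activations, KV cache, and framework overhead, so real deployments need more headroom. The 70-billion-parameter model is hypothetical, and the 80GB H100 figure is an assumption taken from NVIDIA's published SXM specification rather than from this article.

```python
import math

# Bytes needed to store one parameter in each data type.
BYTES_PER_PARAM = {"fp16": 2, "bf16": 2, "fp8": 1}


def weights_gb(params_billions: float, dtype: str) -> float:
    """Memory for the weights alone, in GB (1 GB = 1e9 bytes)."""
    # billions of parameters * bytes per parameter = billions of bytes = GB
    return params_billions * BYTES_PER_PARAM[dtype]


def devices_needed(params_billions: float, dtype: str, hbm_gb: float) -> int:
    """Minimum number of accelerators whose combined HBM holds the weights."""
    return math.ceil(weights_gb(params_billions, dtype) / hbm_gb)


MI300X_HBM_GB = 192  # from the article
H100_HBM_GB = 80     # assumption: NVIDIA's published H100 SXM capacity

# Hypothetical 70-billion-parameter model stored in FP16.
print("weights (GB):", weights_gb(70, "fp16"))
print("MI300X devices:", devices_needed(70, "fp16", MI300X_HBM_GB))
print("H100 devices:", devices_needed(70, "fp16", H100_HBM_GB))
```

Under these assumptions, the weights of such a model fit within a single MI300X but have to be split across two H100s, which is the practical meaning of the capacity gap.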

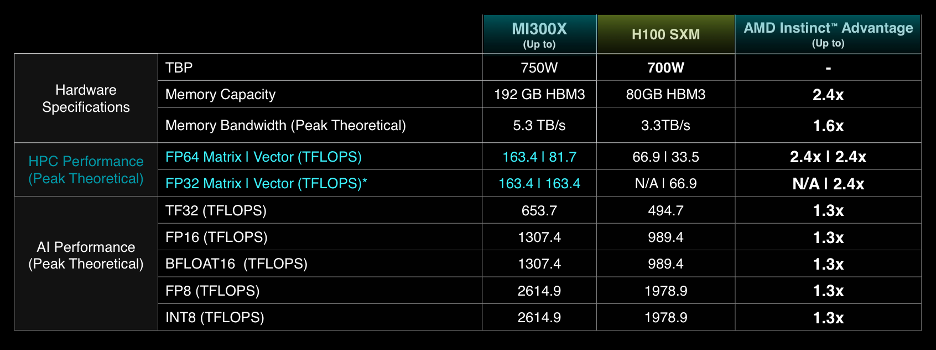

This richness of architecture translates into performance gains. The chart above shows that the MI300X delivers strong performance relative to the NVIDIA H100. In AI performance in particular, the MI300X holds roughly a 30% advantage across data types such as FP8, FP16 and BFLOAT16. As the software community continues to optimize for this chip, we’ll see these AI performance numbers improve further.

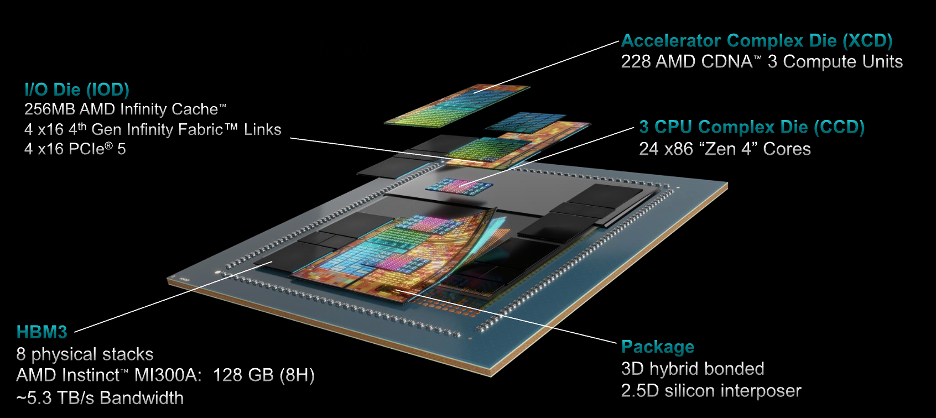

The MI300A, which already powers El Capitan and several other Top500 supercomputers, has CPUs and GPUs sharing the same package. Built on the same four I/O dies are three CPU chiplets with 24 “Zen 4” cores in total (the core design used in 4th Generation EPYC), along with six XCDs (GPU compute dies) populated with 228 CDNA 3 compute units. Think of CDNA as the GPU core architecture.

A significant architectural change in the MI300A is unified memory that both the CPU and the GPU can access. This should reduce application latency considerably compared to separate memory pools, which require data to be copied between CPU and GPU memory before work can proceed (as with the MI250).

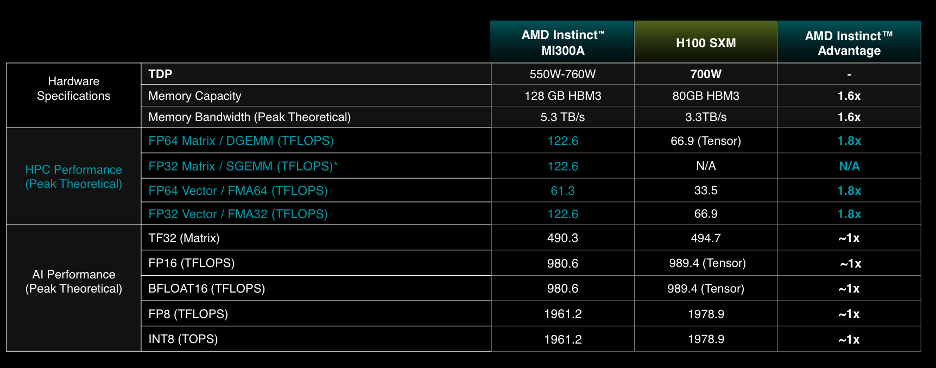

Based on the numbers that AMD has shared, these architectural changes in the MI300A deliver performance improvements. Compared with NVIDIA, the HPC performance advantage is significant, ranging between 60% and 80%.

Though benchmarks are to be taken with a grain of salt, the spirit of the story is clear. AMD has delivered platforms that can perform as well as—or outperform—devices from the company that has had a lock on the GPU market.

More important than the published benchmarks were the on-stage proclamations at the MI300 launch event from customers and partners about the MI300X. You can consider a new chip to be fully validated when some of the largest cloud companies, such as Azure, Oracle Cloud, and Meta, discuss deploying your part at scale. Each cloud provider looks very carefully at performance, power, and cost. Components are adopted only if the technology meets their specific needs. And if specific TCO metrics aren’t achieved, purchase orders are never issued. Clearly, these cloud providers have seen the value of the new AMD chips.

I want to make a final note on performance. Today’s numbers reflect the work AMD has put into ROCm, the company’s software toolset for programming AMD GPUs. I believe the AI performance of the MI300X will improve over time as the MI300 is broadly deployed in the cloud (Azure, Oracle, Meta) and taken up by the developer community.

The AI silicon battle has just begun

While AMD has been delivering GPU technology to the market for some time, I believe we are just beginning to see the battle between competing silicon vendors in this niche legitimately heat up. Why is that? There are a few reasons:

- The MI300X is a beast — The specs on this chip are impressive. More importantly, the MI300X beats the specs of both the H100 and the recently announced H200 chip from NVIDIA. Will NVIDIA sit on its hands? Of course not. But the MI300X is a viable alternative to NVIDIA, not simply a second choice.

- Cloud adoption — Azure, Oracle, and Meta adopting the MI300 is critical for several reasons. First, the availability of instances from these cloud providers, at what I suspect will be a significant price advantage, should accelerate adoption. As more developers and enterprise customers use those instances, ROCm will become more pervasive in the developer community. Second, the cloud providers will perform software optimizations in their frameworks and toolchains that translate into better AI performance. These optimizations will be contributed back into open source projects and the developer community, furthering this dynamic.

- Market dynamics — The market is starved of GPUs. From the cloud to the enterprise, the demand created by generative AI has not been fulfilled by what has been the default option, the H100. The MI300 doesn’t just fill this gap; it does so without sacrificing performance.

GPU competition is a good thing

When I covered the launch of AMD’s first EPYC in 2017, I spoke of the importance of building and enabling an ecosystem that spanned hardware, software, and sellers (i.e., the channel). When looking at the launch of the MI300, the same success factors prevail. The difference is that AMD has already done much of this heavy lifting, and its path to achieving velocity in the marketplace is much shorter.

I fully expect NVIDIA to respond to AMD and the MI300X, not simply with its upcoming H200 but through further optimizations and tweaks within the architecture and software stack. It has a distinct advantage with the broad adoption of its CUDA toolkit, and I anticipate that the company will exploit this advantage to its fullest extent. However, for code written against a framework such as PyTorch rather than directly against CUDA, much of that lock-in goes away. Regardless, expect that AMD will respond in kind.
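The PyTorch point can be illustrated with a short sketch. It relies on the fact that ROCm builds of PyTorch expose AMD GPUs through the same `torch.cuda` interface and the `"cuda"` device string, so framework-level code typically needs no changes. The helper names `describe_backend` and `run_matmul` are hypothetical, and the sketch falls back gracefully when PyTorch or a GPU is not present.

```python
import importlib.util


def describe_backend() -> str:
    """Report which backend this PyTorch install targets, if any."""
    if importlib.util.find_spec("torch") is None:
        return "torch not installed"
    import torch

    if not torch.cuda.is_available():
        return "cpu"
    # ROCm builds set torch.version.hip; CUDA builds leave it as None.
    return "rocm" if getattr(torch.version, "hip", None) else "cuda"


def run_matmul(n: int = 256):
    """Multiply two n x n matrices on whatever device is available."""
    import torch

    # The same "cuda" device string addresses NVIDIA GPUs on CUDA builds
    # and AMD GPUs on ROCm builds.
    device = torch.device("cuda" if torch.cuda.is_available() else "cpu")
    a = torch.randn(n, n, device=device)
    b = torch.randn(n, n, device=device)
    return (a @ b).shape


if __name__ == "__main__":
    backend = describe_backend()
    print("backend:", backend)
    if backend != "torch not installed":
        print("result shape:", tuple(run_matmul()))
```

Nothing in `run_matmul` mentions a vendor, which is the sense in which the lock-in weakens at the framework level. Hand-written CUDA kernels and vendor-specific libraries are a different matter and still need porting.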

Given the market dynamics and the quality of the MI300X from an architectural perspective, AMD is well poised for success in the AI era. And that’s good for everybody—AMD, customers, and even NVIDIA.