AMD recently held an all-day event called Advancing AI Day that covered its datacenter and cloud efforts alongside client computing. For a deeper look at the AI-specific datacenter products it introduced, see my colleague Matt Kimball’s coverage of the MI300X GPU and MI300A APU. AMD has demonstrated clear leadership with these new parts, which have given the company much more credibility in the AI hardware race.

Most of the action in AI is in the datacenter and the cloud, although over time this will shift towards the client with a more hybrid architecture. Eventually, developers will need to deploy smaller, more efficient AI models on-device to save on cost, using the cloud only for more complex tasks. Balancing smaller models on-device with larger ones in the cloud is a challenge that developers, OEMs, and OS vendors such as Microsoft will have to tackle for hybrid AI.

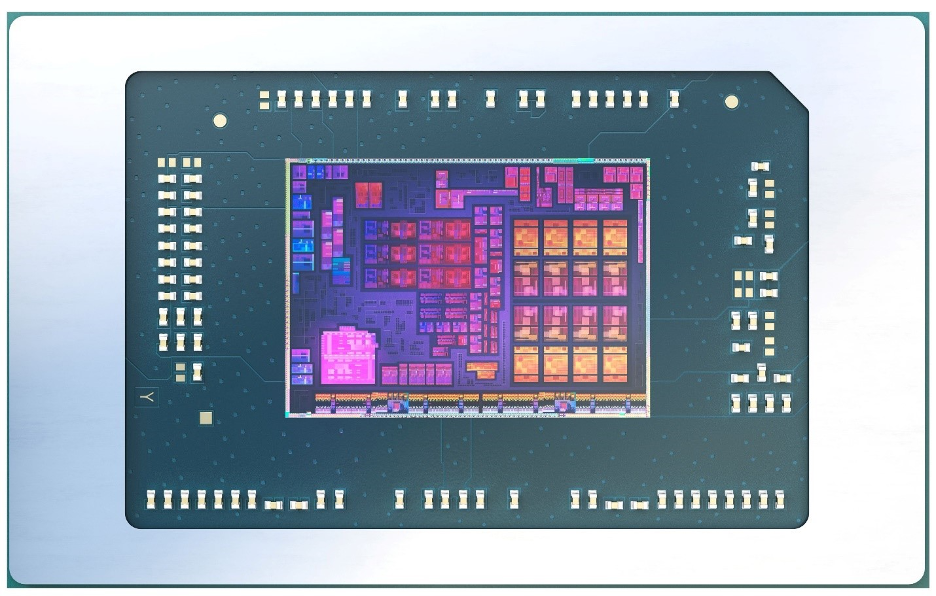

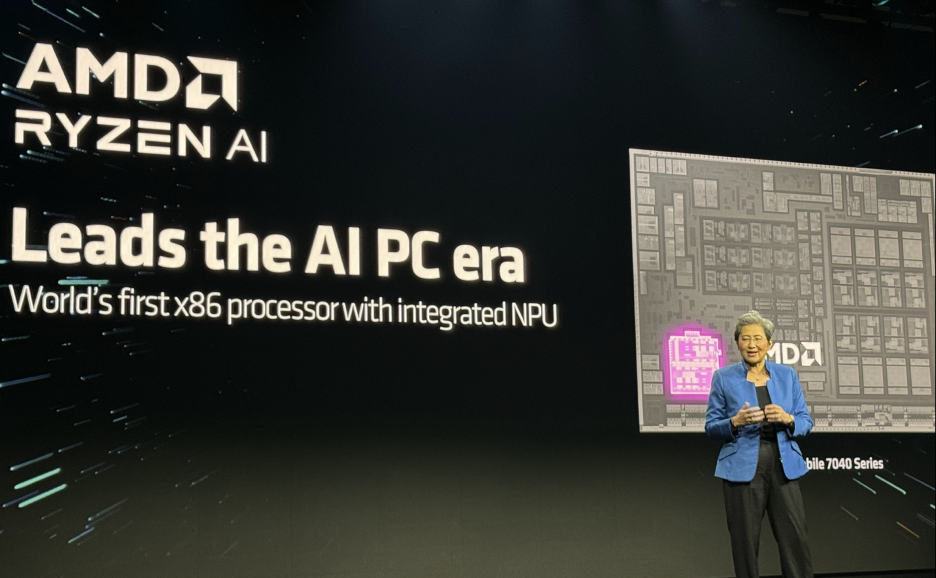

Toward the end of Advancing AI Day, AMD CEO Lisa Su talked about the company’s current AI chips for the PC, the Ryzen 7040 series, which has been shipping since the beginning of the year. AMD has focused its messaging on an end-to-end AI story, with much of the relevant IP coming from its $35 billion acquisition of Xilinx, completed in 2022. The NPU (neural processing unit) inside the Ryzen 7040 series uses the “XDNA” architecture, which is derived from Xilinx IP. XDNA should not be confused with the “RDNA” architecture used for graphics or the “CDNA” architecture found in AMD datacenter accelerators such as the MI300 series. The Xilinx acquisition has made AMD’s end-to-end AI story much more credible.

AMD’s AI PC

At the event, AMD reiterated its claim to have launched the AI PC in 2023 with the Ryzen 7040 series. It bases that claim on more than 50 designs that have already shipped millions of units across all the leading PC brands. This is a clear response to Intel’s AI PC campaign and to Qualcomm’s strong AI-focused messaging for its Snapdragon X platform, which arrives next year. AMD can claim to have introduced the first x86 processor with a dedicated integrated NPU. Intel can’t make that claim until it launches Meteor Lake, a.k.a. Intel Core Ultra, in the next few days.

That said, uptake of AI applications that can take advantage of a dedicated NPU has mostly been limited to content-creation apps such as Adobe’s Photoshop, Premiere Pro, and Lightroom and Blackmagic Design’s DaVinci Resolve. AMD is also engaging with many of the same developers that Intel says it is working with through its AI PC acceleration program.

Ryzen AI Software

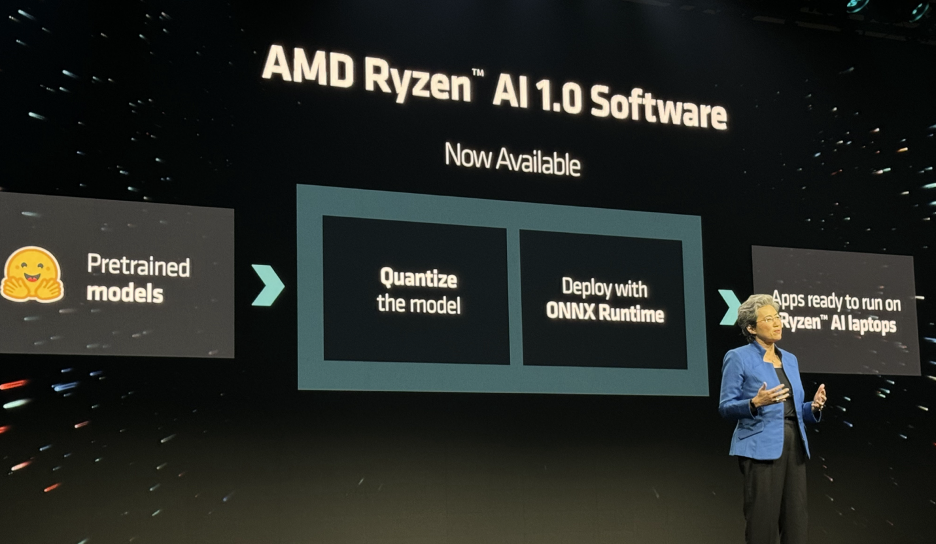

Ultimately, the entire industry needs to work together to help developers take advantage of the NPUs in their processors. That is why AMD, Intel, NVIDIA, and Qualcomm are all pursuing developers so aggressively. At Advancing AI Day, AMD also announced the Ryzen AI Software Platform. It takes models trained in industry-standard frameworks such as TensorFlow and PyTorch, or models already in ONNX format, and quantizes them. It then converts them to run on ONNX Runtime on a Ryzen AI-enabled processor.
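This article does not describe how the Ryzen AI quantizer works internally. The following is therefore only a generic illustration of what “quantizing” a model means: a minimal Python sketch of symmetric, per-tensor int8 post-training quantization. It assumes one scale factor for the whole tensor and no zero-point. The helper names are hypothetical, and this is not AMD’s toolchain or API.

```python
def quantize_int8_symmetric(values):
    """Quantize floats to int8 codes with one per-tensor scale (hypothetical helper).

    Symmetric scheme: the zero-point is 0 and the largest magnitude maps to 127.
    """
    max_abs = max(abs(v) for v in values)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    # Round each value to the nearest step, then clamp to the range [-127, 127].
    q = [max(-127, min(127, round(v / scale))) for v in values]
    return q, scale


def dequantize(q, scale):
    """Recover approximate float values from int8 codes."""
    return [x * scale for x in q]


weights = [0.5, -1.27, 0.0, 1.0]
q, scale = quantize_int8_symmetric(weights)
restored = dequantize(q, scale)
print(q)  # [50, -127, 0, 100]
```

Each restored value differs from the original by at most half a step (scale / 2). Trading that small rounding error for compact 8-bit integer math is the general idea behind preparing a model for an accelerator such as an NPU.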

I believe AMD’s approach makes sense, but I would have liked to hear more AI partners explain in detail how they are already working with AMD to bring AI features to market. In fact, if AMD wants to claim AI leadership on the PC, I think it should have followed the approach it took on the datacenter side during Advancing AI Day, where OEMs and partners validated its claims of leadership in the space.

AI Developers

I liked that AMD announced a pervasive AI design contest with three categories of AI applications: robotics, generative AI, and PC AI. First prize in each category is worth $10,000, but I wish AMD would add a zero to that number to make it more attractive to developers. A contest does encourage developers to create applications in these categories, but I believe the best way to win developers over is with easy-to-use software and detailed documentation. Faster hardware is always nice, too, and that is part of what AMD hopes to deliver with the next generation of Ryzen mobile processors.

New Ryzen 8000 Series AI

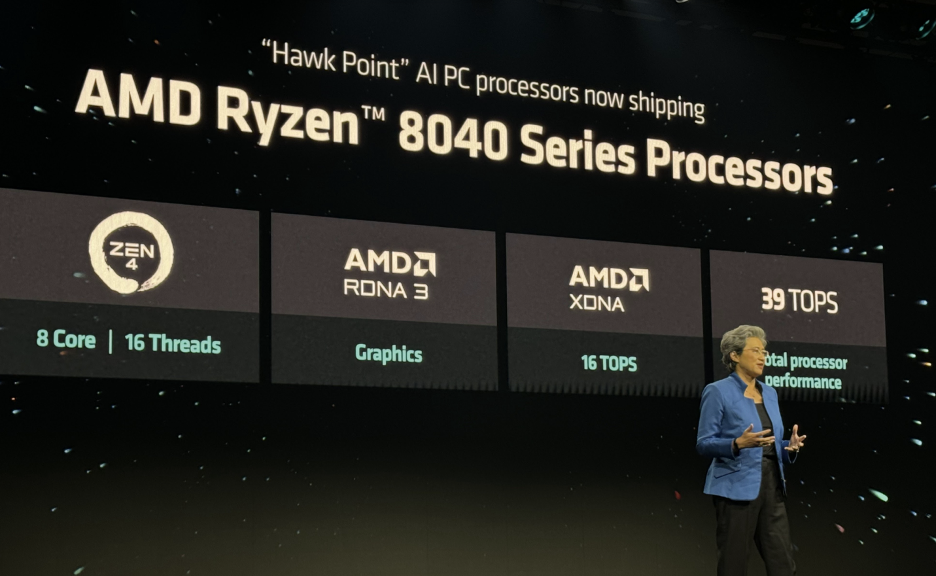

In the Ryzen 8040 series, AMD has increased the AI performance of its mobile processors by as much as 40% on vision models and Llama 2. AMD claims that the NPU in the Ryzen 8040, codenamed Hawk Point, is 60% faster, reaching 16 TOPS. The NPU contributes to total system AI performance of 39 TOPS across the NPU, CPU, and GPU combined. The company hasn’t explained how it raised NPU performance by 60% on the same XDNA architecture, but hopefully we’ll learn more closer to CES next month.

In addition to sharing Hawk Point’s higher AI performance, AMD teased its platform for 2025 (shipping in late 2024), codenamed Strix Point. This is important because AMD claims its new XDNA 2 NPU will triple NPU performance to 30 TOPS. If all else stays equal, swapping Hawk Point’s 16-TOPS NPU for a 30-TOPS one would put AMD’s total system performance at roughly 53 TOPS. That estimate assumes the CPU and GPU don’t get faster, though they probably will. I believe AMD is teasing this level of performance because it wants investors to know that it will have something to compete with Intel and Qualcomm in 2025, which may be the year the AI race really heats up.
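The TOPS figures above can be checked with simple arithmetic. The short Python sketch below uses only the numbers AMD quoted. Like the estimate in the text, it assumes that the CPU and GPU contribution stays flat from Hawk Point to Strix Point. The variable names are illustrative.

```python
# Figures as reported in the article (AMD's claims, not independent measurements).
HAWK_POINT_NPU_TOPS = 16
HAWK_POINT_TOTAL_TOPS = 39  # NPU + CPU + GPU combined
NPU_UPLIFT = 0.60           # claimed Hawk Point NPU gain over the Ryzen 7040 series
STRIX_POINT_NPU_TOPS = 30   # claimed XDNA 2 NPU figure

# Back out the Ryzen 7040 NPU figure implied by the claimed 60% uplift.
ryzen_7040_npu_tops = HAWK_POINT_NPU_TOPS / (1 + NPU_UPLIFT)

# Everything that is not the NPU is the CPU and GPU contribution.
cpu_gpu_tops = HAWK_POINT_TOTAL_TOPS - HAWK_POINT_NPU_TOPS

# Assumption: the CPU and GPU stay flat and only the NPU changes.
strix_point_estimate = cpu_gpu_tops + STRIX_POINT_NPU_TOPS

# How the 30-TOPS NPU compares with each earlier generation.
gain_vs_7040 = STRIX_POINT_NPU_TOPS / ryzen_7040_npu_tops
gain_vs_hawk_point = STRIX_POINT_NPU_TOPS / HAWK_POINT_NPU_TOPS

print(round(ryzen_7040_npu_tops, 1), cpu_gpu_tops, strix_point_estimate)  # 10.0 23 53
```

The implied Ryzen 7040 NPU figure of 10 TOPS shows that 30 TOPS is three times the original 7040 NPU. It is a little less than double Hawk Point’s 16 TOPS.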

One thing that I think will confuse AMD customers is that the entry-level Ryzen 3 and Ryzen 5 parts in the Ryzen 8040 series lack an NPU. I am sure this was done to hit a certain price point for OEMs, but I think offering any next-generation parts without an NPU is a mistake. Consumers and developers will look to AMD’s Ryzen 8040 series as the default for next-generation experiences, and SKUs that can’t deliver AI acceleration are bound to disappoint. This is especially true because lower-end parts usually ship in the biggest volumes and therefore have the greatest impact on the size of the installed base. I appreciate that AMD, Intel, and Qualcomm each offer the same NPU performance across most of their chipsets. Even so, I think AMD made an oversight by leaving the NPU out of these entry-level parts.

Wrapping up

While AMD has positioned itself comfortably as an AI competitor to NVIDIA in the datacenter, it has struggled to get a firm hold on AI in the PC. AMD does not seem to be alone in these struggles. Still, I believe AMD is in a difficult spot given the current state of AI on the PC, especially because Microsoft shapes much of what the AI PC means today and will mean in the future. Chip vendors including AMD, Intel, NVIDIA, and Qualcomm want to define the AI PC on their own terms while also accommodating Microsoft’s vision of how it should work. For example, when Microsoft launched its AI Copilot earlier this year, it limited the feature to PCs running the latest version of Windows 11. It has since reversed course and said it will offer Copilot on Windows 10 as well, even though Windows 10 is approaching end of support and hasn’t shipped on new PCs in years.

It is quite clear that AMD already has AI PC hardware in place today, but that hardware doesn’t mean much without OEMs and developers that take advantage of it. The good news for AMD is that the AI space is moving extremely quickly. The need to scale AI applications and reduce cloud computing costs will likely push developers toward a hybrid AI model, one that balances local computing with the cloud seamlessly and invisibly to the user.

AMD is clearly looking to reclaim the term “AI PC,” but I’m not sure anyone can claim it quite yet. It is abundantly clear, though, that there will be many different types of AI PCs, and it will be important for companies such as AMD and Microsoft to make it easier for developers to build on as many of them as possible. I suspect that 2023 will end up being regarded as the year of hype, while 2024 will be the year of seeding hardware and 2025 is when we will really start to see furious competition and broader applications for AI.